The system includes a set of service instances. A service instance has a unique Instance Name and is made up of one or more processes.

Some service instances require that you have the applicable module licensed and enabled.

Process Name: ACCRUAL

Default Schedule: None

Module Required: Balance Accrual

The ACCRUAL service creates person balance records, adds to these balances, and performs carryover from one balance accrual period to the next. At the end of the balance period, the service will close out the old balance.

The Balance Policy determines how and when the balances should be accrued. The ACCRUAL service will run for a Balance Policy that has Auto. Accrual checked in the Accrual tab. The service uses the Accrual Ruleset attached to this Balance Policy to calculate the amount to accrue to the balance.

When the ACCRUAL service updates a person’s balance record, the update will appear in the Balance Transactions tab of the Balances form.

Note: If a balance period is based on an employee’s hire date, rehire date, or service date, and the ACCRUAL service cannot find these date values, the accrual will fail. Make sure these dates are specified in the employee’s Person record. See Effectivity tab (Balance Policy form) for more information.

Process Name: ANALYTICS

Default Schedule: None

Module Required: Analytics

The ANALYTICS service is used to calculate the data that is displayed in the KPI Dashboard and the KPI Report. The KPI Dashboard displays charts with Key Performance Indicators: Efficiency, Productivity, and Utilization. You can display this data for one or more persons. The KPI Report displays the same data in a report format.

In order to display this data, the ANALYTICS service must process Ruleset Profiles. A Ruleset Profile is assigned to specific events in a Process Policy (via the Events tab in the Process Policy form). When a person posts the event, the system checks the person’s Process Policy to see which Ruleset Profile is assigned to the event. This Ruleset Profile becomes the event’s Process Name. The ANALYTICS service (which is configured to run for specific Ruleset Profiles) processes the events whose Process Name matches one of those Ruleset Profiles.

You can also specify which Person Groups the ANALYTICS service will process.

RULESET_PROFILE: This parameter determines the Ruleset Profiles that will be processed by the ANALYTICS service and the order in which they will be processed. Select the Ruleset Profiles you want the ANALYTICS service to process. Move the profile from the Available column to the Selected column. Use the up and down arrows to place the Selected profiles in the order you want them to be processed; the profile at the top of the list will be processed first.
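The routing described above can be sketched in Python. This is a minimal illustration, not the product's implementation: the `Event` class, the `post_event` and `analytics_run` functions, and the dictionary standing in for the Events tab of a Process Policy are all hypothetical. It shows how a posted event picks up a Ruleset Profile as its Process Name, and how an ANALYTICS instance then processes events one profile at a time, in Selected-column order.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Event:
    person: str
    event_code: str
    process_name: Optional[str] = None  # filled in when the event is posted


def post_event(event: Event, policy_events: Dict[str, str]) -> Event:
    """Stamp the event with the Ruleset Profile assigned to its event code.

    `policy_events` is a hypothetical stand-in for the Events tab of the
    person's Process Policy: {event_code: ruleset_profile}.
    """
    event.process_name = policy_events.get(event.event_code)
    return event


def analytics_run(events: List[Event], selected_profiles: List[str]) -> List[Event]:
    """Return the events an ANALYTICS instance would process, in processing order."""
    ordered: List[Event] = []
    for profile in selected_profiles:  # the profile at the top of Selected goes first
        ordered.extend(e for e in events if e.process_name == profile)
    return ordered  # events whose Process Name is not selected are skipped


policy = {"RUN": "PROFILE_A", "SETUP": "PROFILE_B"}
events = [post_event(Event("p1", code), policy) for code in ("SETUP", "RUN", "BREAK")]
print([e.event_code for e in analytics_run(events, ["PROFILE_A", "PROFILE_B"])])  # ['RUN', 'SETUP']
```

The BREAK event has no Ruleset Profile in this hypothetical policy, so it never receives a Process Name and is not processed.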

Process Name: ATTENDANCE

Default Schedule: None

Module Required: Attendance Management

The ATTENDANCE service automatically posts non-labor transactions for the current date. These transactions include holiday, paid and unpaid time off, early departure, clock outs for persons who failed to clock out, short day, no-show events, and day worked for persons who are automatic or who only report elapsed transactions for exceptions. The service looks for these events in the Attendance Policy. If the event applies for that day and time, the service will post it.

In the Attendance Policy form you configure rules that determine which non-labor events can post to timecards via the ATTENDANCE service. The rules include posting holiday and penalty events, and using balances to cover penalty events. The Attendance Policy rules you create are assigned to a person or person group via the Person Setting/Person Group Setting forms. Only the events that are configured in a person's Attendance Policy will post to the person's timecard when the service runs.

For example, the service may be scheduled to run at 7 AM on June 1. The service first checks the person's Holiday Calendar. If June 1 is a holiday and the person is working the 7 AM shift, the service posts a holiday event to the person's timecard. If June 1 is not a holiday, the service checks Event Scheduling for any “auto-post” events such as vacation or scheduled time-off. The service will then post the applicable vacation or time-off events for the person. If the person is not on vacation, the service may post a tardy, day-worked, no report/no clock out, or short day event, depending on the time of day.

The ATTENDANCE service will select which dates to process based on the MODE parameter. If you want to specify another date, you can do so in the Service Parameters form. The ATTENDANCE service will post events based on the configuration in the person's Attendance Policy.

The ATTENDANCE service includes the tasks listed below. When you add or modify an instance of the ATTENDANCE service (via the Service Instance form), you can select which of these tasks the service should perform.

In order for the ATTENDANCE service to perform a task, it must be enabled in the person's Attendance Policy. For example, the service will perform the ATTENDANCE_HOLIDAY task if that task is Selected for the service instance and Enable Holiday is checked in the person's Attendance Policy Holiday record.

ATTENDANCE_HOLIDAY

The ATTENDANCE service will post holiday events based on the person’s Holiday Calendar. Note that a Holiday Calendar and a Normal Schedule must be in place in order for a Holiday event to post. See Holiday Tab – Attendance Policy for more information.

The service will first check to see if the person has a Holiday Calendar Override setting and, if so, the service will use the Holiday Calendar in that setting. If the person does not have a Holiday Calendar Override, the service will use the Holiday Calendar specified in the person’s Attendance Policy/Holiday tab.
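A minimal Python sketch of the calendar lookup and the prerequisites described above. The dictionary keys (`holiday_calendar_override`, `attendance_policy_holiday_calendar`, `enable_holiday`, `normal_schedule`) are hypothetical names for the settings in the text, and the sketch does not check whether a given date is actually a holiday.

```python
from typing import Optional


def holiday_calendar_for(person: dict) -> Optional[str]:
    """Return the Holiday Calendar the ATTENDANCE_HOLIDAY task would use.

    `person` is a hypothetical dict; the keys below are illustrative only.
    """
    override = person.get("holiday_calendar_override")
    if override:
        return override  # a Holiday Calendar Override setting takes precedence
    return person.get("attendance_policy_holiday_calendar")  # Holiday tab value


def can_post_holiday(person: dict) -> bool:
    """Holiday posting needs Enable Holiday, a Holiday Calendar, and a Normal Schedule."""
    return (
        bool(person.get("enable_holiday"))
        and holiday_calendar_for(person) is not None
        and person.get("normal_schedule") is not None
    )
```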

ATTENDANCE_TIME_OFF

This task of the ATTENDANCE service will post approved time off requests to the person’s timecard, provided the person's Attendance Policy has Time Off enabled. The ATTENDANCE service will also look at the person's Attendance Policy Time Off settings to see what rules, if any, should apply to time off postings.

ATTENDANCE_DAY_WORKED

The ATTENDANCE service will post a day-worked event based on the person's Attendance Policy Day Worked settings.

AUTOMATIC_CLOCKOUT

The AUTOMATIC_CLOCKOUT task looks for a clock in without a matching clock out for each person and post date it processes. When the service finds a missing clock out, it then checks for the person's schedule on that post date.

If the person does not have a schedule, the service checks to see if the current time is after the person's last recorded activity plus the Max Missing Clk Out hours in the person's Clock Policy. If this condition is met, the service posts an automatic clock out. The timestamp of the automatic clock out will be the timestamp of the last recorded activity.

If the person does have a schedule, the ATTENDANCE service will check to see if the current time is after the scheduled end time plus the Max Missing Clk Out hours. If this condition is met, the service will post an automatic clock out. The timestamp of the automatic clock out will be based on the Sch OT ClockOut, Gap OT ClockOut, or ClockOut Time setting in the person's Clock Policy (depending on the kind of schedule the person has). For example, a person has a Normal, non-overtime schedule. In the Clock Policy, the ClockOut Time setting is At Last Activity. The ATTENDANCE service will check to see if the current time is after the scheduled end time plus the Max Missing Clk Out hours. If this condition is met, the service will post an automatic clock out with the timestamp of the last recorded activity.
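The timing rules above can be expressed as a small Python function. This is a sketch under stated assumptions: `auto_clockout_time` and its parameters are hypothetical, "after" is treated as strictly later, and only two ClockOut Time behaviors are modeled: `AT_LAST_ACTIVITY` (At Last Activity in the text) and `AT_SCHEDULED_END`, an assumed label for posting the clock out at the scheduled end time.

```python
from datetime import datetime, timedelta
from typing import Optional


def auto_clockout_time(now: datetime, last_activity: datetime, max_missing_hours: float,
                       scheduled_end: Optional[datetime] = None,
                       clockout_setting: str = "AT_LAST_ACTIVITY") -> Optional[datetime]:
    """Return the automatic clock out timestamp, or None if it is too early to post."""
    grace = timedelta(hours=max_missing_hours)  # Max Missing Clk Out hours
    if scheduled_end is None:
        # No schedule: wait until the last recorded activity plus the grace period.
        return last_activity if now > last_activity + grace else None
    # Scheduled: wait until the scheduled end time plus the grace period.
    if now <= scheduled_end + grace:
        return None
    if clockout_setting == "AT_LAST_ACTIVITY":
        return last_activity
    if clockout_setting == "AT_SCHEDULED_END":  # hypothetical label
        return scheduled_end
    raise ValueError(f"setting not modeled in this sketch: {clockout_setting}")


last = datetime(2024, 6, 1, 14, 30)
end = datetime(2024, 6, 1, 15, 0)
print(auto_clockout_time(datetime(2024, 6, 1, 17, 1), last, 2, scheduled_end=end))
```

With a 15:00 scheduled end and a 2-hour Max Missing Clk Out value, nothing posts until after 17:00; at 17:01 the sketch returns the 14:30 last activity.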

When an automatic clock out is posted, you can configure a warning flag to appear in the timecard. Both the supervisor and the employee can also receive a message when an automatic clock out is posted. The warning flag and message will alert the supervisor of the automatic clock out, in case any adjustments need to be made. For example, an employee may forget to clock out and an automatic clock out posts at the employee's scheduled end time. The supervisor sees the warning on the employee's timecard and knows the employee stayed late and worked overtime that day. The supervisor is able to correct the employee's timecard accordingly.

ATTENDANCE_EARLY_DEPARTURE

The ATTENDANCE service will post an early departure event based on the person's Attendance Policy Early Departure settings.

ATTENDANCE_NO_SHOW

The ATTENDANCE service will post a no-show event based on the person's Attendance Policy No Show settings.

ATTENDANCE_SHORT_DAY

The ATTENDANCE service will post a short day event based on the person's Attendance Policy Short Day settings.

ATTENDANCE_SIGN_DAY

When the person's Sign Policy is set to Automatic, the ATTENDANCE service applies employee and/or supervisor signatures to the person's timecard, including on gap days. When the person's Sign Policy is set to Exception, the ATTENDANCE service signs the timecard when there is no schedule and the person has performed no sign actions, including on gap days.
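The two Sign Policy cases reduce to a simple decision, sketched below in Python. The function name and the `AUTOMATIC`/`EXCEPTION` constants are hypothetical labels for the settings in the text; other Sign Policy settings are not covered.

```python
def should_auto_sign(sign_policy: str, has_schedule: bool, has_sign_actions: bool) -> bool:
    """Decide whether the ATTENDANCE_SIGN_DAY task signs a timecard for one day."""
    if sign_policy == "AUTOMATIC":
        return True  # signs every day, gap days included
    if sign_policy == "EXCEPTION":
        # Sign only days with no schedule and no sign actions by the person.
        return not has_schedule and not has_sign_actions
    return False  # other Sign Policy settings are outside this sketch
```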

DISCIPLINARY_LEVELS

This task will process the Ruleset Name specified in the Attendance Levels tab of the person’s Attendance Policy to determine a person’s point level and which supervisors will be notified of the point and level changes.

Note: Some Attendance tasks post immediately upon certain actions. A Clock In triggers several rulesets to fire:

Late Arrivals

Outside of Clocks Gap

Holidays

Vacations and Other Time Off

Day Worked if configured to Post Immediately

MODE: Determines the range of days for which the service will run.

TODAY - The service will run for the current date.

CURRENT_PAY_PERIOD - The service will run for the pay period that is in effect on the current date. The service determines a person's pay period based on their Pay Policy.

PREVIOUS_PAY_PERIOD - The service will run for the pay period before the pay period that is in effect on the current date.

NEXT_PAY_PERIOD - The service will run for the pay period after the pay period that is in effect on the current date. NEXT_PAY_PERIOD may be used to post holiday and vacation events in advance.

DATE_RANGE - The service will run for the dates in the START_DATE and END_DATE range.

GID: Group Identifier.

DID: Device Identifier.

START_DATE: If you select DATE_RANGE in the MODE field, you must enter a START_DATE. The START_DATE specifies the first day that the service should include in the instance.

END_DATE: If you select DATE_RANGE in the MODE field, you must enter an END_DATE. The END_DATE specifies the last day that the service should include in the instance.
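The MODE options can be sketched in Python as a function that lists the post dates a run covers. This is an illustration under a loud assumption: pay periods are modeled as fixed-length blocks (for example, 14 days) counted from a reference `period_start`, whereas the real boundaries come from each person's Pay Policy. The function names are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

PERIOD_SHIFT = {"CURRENT_PAY_PERIOD": 0, "PREVIOUS_PAY_PERIOD": -1, "NEXT_PAY_PERIOD": 1}


def pay_period_containing(day: date, period_start: date, period_days: int) -> Tuple[date, date]:
    """Return (first, last) day of the fixed-length pay period containing `day`.

    Assumption: pay periods repeat every `period_days` days from `period_start`.
    """
    index = (day - period_start).days // period_days  # floor division also works before period_start
    first = period_start + timedelta(days=index * period_days)
    return first, first + timedelta(days=period_days - 1)


def dates_for_mode(mode: str, today: date, period_start: date, period_days: int,
                   start_date: Optional[date] = None,
                   end_date: Optional[date] = None) -> List[date]:
    """List the post dates an ATTENDANCE instance would process for a MODE value."""
    if mode == "TODAY":
        first = last = today
    elif mode in PERIOD_SHIFT:
        first, last = pay_period_containing(today, period_start, period_days)
        shift = timedelta(days=PERIOD_SHIFT[mode] * period_days)
        first, last = first + shift, last + shift
    elif mode == "DATE_RANGE":
        if start_date is None or end_date is None:
            raise ValueError("DATE_RANGE requires START_DATE and END_DATE")
        first, last = start_date, end_date
    else:
        raise ValueError(f"unknown MODE: {mode}")
    return [first + timedelta(days=i) for i in range((last - first).days + 1)]
```

For example, with two-week periods starting 1/1/2024 and a run on 1/20/2024, CURRENT_PAY_PERIOD covers 1/15 through 1/28 and NEXT_PAY_PERIOD covers 1/29 through 2/11.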

Process Name: ATTENDANCE_REWARD

Default Schedule: None

Module Required: Attendance Reward

The ATTENDANCE_REWARD service uses the Attendance Reward Ruleset in the employee’s Attendance Policy to determine whether the employee has had any attendance violations or if the employee is eligible for an attendance reward.

An Attendance Reward is used to award balance hours to employees who do not have any attendance violations in a specified period of time. The award the employee receives is a specified number of hours in a balance. For example, you may want to award 4 hours of vacation time to employees who have not had any No Show, Late Arrival, or Early Departure events in the last three months.

Attendance Rewards are granted by a supervisor using the Attendance Reward form. The records in the Attendance Reward form are generated by the ATTENDANCE_REWARD service.

The ATTENDANCE_REWARD service first checks the person’s Attendance Policy to see if it includes an Attendance Reward Ruleset. If the Attendance Policy does not have an Attendance Reward Ruleset, the service will not process this person.

If the person has an Attendance Reward Ruleset, the service deletes the person’s existing eligibility and violation records. Award records are not deleted.

The service then uses the person’s Anchor Date (set on the Employee form) and the Range Amount and Range Type parameters in the Attendance Reward rules to create the eligibility and/or violation records. This range will usually begin on the date after the person’s Anchor Date and continue for the specified Range Amount and Range Type. However, if you select Calendar Months or Calendar Weeks as your Range Type, the range will begin on the start of the calendar month or week that is after the Anchor Date. For example, if the Anchor Date is 01/02/2015 and the Range Type is Calendar Months, the range will begin on 02/01/2015. If the Range Type is Months, the range will begin on 01/03/2015.

Note that if the person has no Anchor Date, the service looks at the person’s Employment Profile to find the most recent date range when the person was active. The start of that range will be used as the Anchor Date.

The service continues processing ranges for a person until it reaches a range that either starts later than the current day, or starts on or before the current day, extends beyond it, and has no violations through the current day.
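The range rules above can be sketched in Python. The Calendar Months and Months cases reproduce the 01/02/2015 example from the text; everything else is an assumption: the range type names `DAYS` and `WEEKS`, Monday as the start of a calendar week, each new range starting the day after the previous one ends, and an in-progress range with no violations producing no record. All function names are hypothetical.

```python
import calendar
from datetime import date, timedelta
from typing import List, Tuple


def add_months(d: date, n: int) -> date:
    """Shift a date by n months, clamping the day to the target month's length."""
    months = d.month - 1 + n
    year, month = d.year + months // 12, months % 12 + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def first_range_start(anchor: date, range_type: str) -> date:
    if range_type == "CALENDAR_MONTHS":
        return add_months(anchor.replace(day=1), 1)  # first day of the next month
    if range_type == "CALENDAR_WEEKS":
        return anchor + timedelta(days=7 - anchor.weekday())  # next Monday (assumed)
    return anchor + timedelta(days=1)  # the day after the Anchor Date


def range_end(start: date, amount: int, range_type: str) -> date:
    if range_type in ("MONTHS", "CALENDAR_MONTHS"):
        return add_months(start, amount) - timedelta(days=1)
    if range_type in ("WEEKS", "CALENDAR_WEEKS"):
        return start + timedelta(weeks=amount, days=-1)
    return start + timedelta(days=amount - 1)  # DAYS (assumed name)


def reward_ranges(anchor: date, amount: int, range_type: str, today: date,
                  violations: List[date]) -> List[Tuple[date, date, str]]:
    """Return (start, end, status) records until a documented stop condition is hit."""
    records = []
    start = first_range_start(anchor, range_type)
    while start <= today:  # stop once a range would start after today
        end = range_end(start, amount, range_type)
        hit = any(start <= v <= min(end, today) for v in violations)
        if end > today and not hit:
            break  # range extends beyond today with no violations through today
        records.append((start, end, "VIOLATION" if hit else "ELIGIBLE"))
        start = end + timedelta(days=1)  # assumption: ranges are back to back
    return records
```

For an Anchor Date of 01/02/2015, one-month ranges, a run on 04/10/2015, and a single violation on 02/15/2015, the sketch returns an eligible range (01/03 to 02/02), a violation range (02/03 to 03/02), and an eligible range (03/03 to 04/02), then stops at the range that is still in progress.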

Once the service finishes running, you can use the Attendance Reward form to view the eligibility and violation records and to grant awards to eligible employees.

This service has no parameters.

Process Name: BADGE_RESET

Default Schedule: Run every hour on the hour, indefinitely

Default Schedule Enabled: No

Module Required: Badge Management

The BADGE_RESET service looks for any Active badges that were issued to a person record at some point in time, but whose assignment is no longer valid (i.e., the end date of the record in the Badge tab of the Employee form has expired, or the record was deleted from the Badge tab of the Employee form and is no longer considered issued). These badges will get a Badge State of Available (meaning that the badge is now available for use). The updated Badge State will be reflected in the Badge tab of the Badge Group form.

Process Name: BATCH

Default Schedule: None

Module Required: None

The BATCH service is used to bundle service instances that depend on one another and must run in a specified order. For example, you may need the OUT_EBS_WIP_COST service to run after LABOR_ALL_MT and RECALCULATION so that the correct records are exported.

When the BATCH service runs, it runs the individual service instances in the batch in the order they are listed. If one of the individual service instances fails, the BATCH service stops running. If an individual service instance has errors but is able to finish running, the BATCH service continues until all the services have run. See “View Batch Service Errors” below.
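The difference between an instance that fails and one that merely logs errors can be sketched in Python. This is a hypothetical model, not the product's scheduler: each instance is a callable that returns its error count, and raising `InstanceFailed` stands for a failure (such as a disabled instance). The instance names come from the examples in this section.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


class InstanceFailed(Exception):
    """Raised by an instance that cannot finish running."""


@dataclass
class BatchResult:
    completed: List[str] = field(default_factory=list)
    error_count: int = 0  # compare the Error Log Count column
    failed_instance: Optional[str] = None  # what the Service Audit form would point to


def run_batch(instances: List[Tuple[str, Callable[[], int]]]) -> BatchResult:
    """Run instances in listed order; errors are tallied, a failure stops the batch."""
    result = BatchResult()
    for name, run in instances:
        try:
            errors = run()
        except InstanceFailed:
            result.failed_instance = name
            break  # later instances in the batch do not run
        result.error_count += errors  # errors alone do not stop the batch
        result.completed.append(name)
    return result


def disabled() -> int:
    raise InstanceFailed("instance is disabled")


print(run_batch([("ATTENDANCE", lambda: 0), ("LABOR_ALL_MT", disabled), ("RECALCULATION", lambda: 0)]))
```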

If you have individual services scheduled to run that are also included in a BATCH service instance, the individual service will stop running until the BATCH service is complete.

You need to define which service instances to include in the BATCH. You can run the BATCH service manually (in the Service Monitor form) or you can create a schedule for it.

In the Service Instance form, select the BATCH service and click Modify. Use the Instance Names parameter to select the individual services to include in the batch. Move a service from the Available column to the Selected column to include it in the batch. Use the up/down controls next to the Selected column to place the services in the order they will run (the service listed on top will run first).

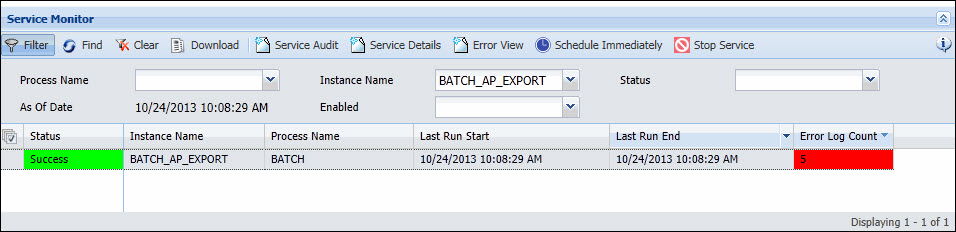

The Error Log Count column in the Service Monitor form will indicate whether the BATCH service ran with any errors. In the following illustration, the service finished running but had 5 errors.

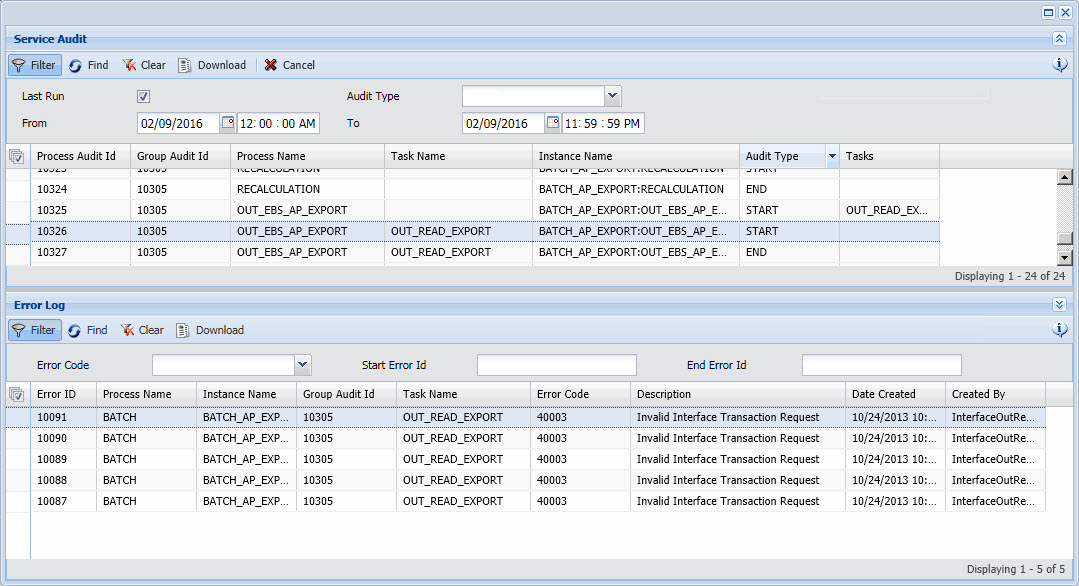

To find out which instance in the batch had the errors, use the Service Audit form. In the example below, the error occurred for the OUT_EBS_AP_EXPORT instance.

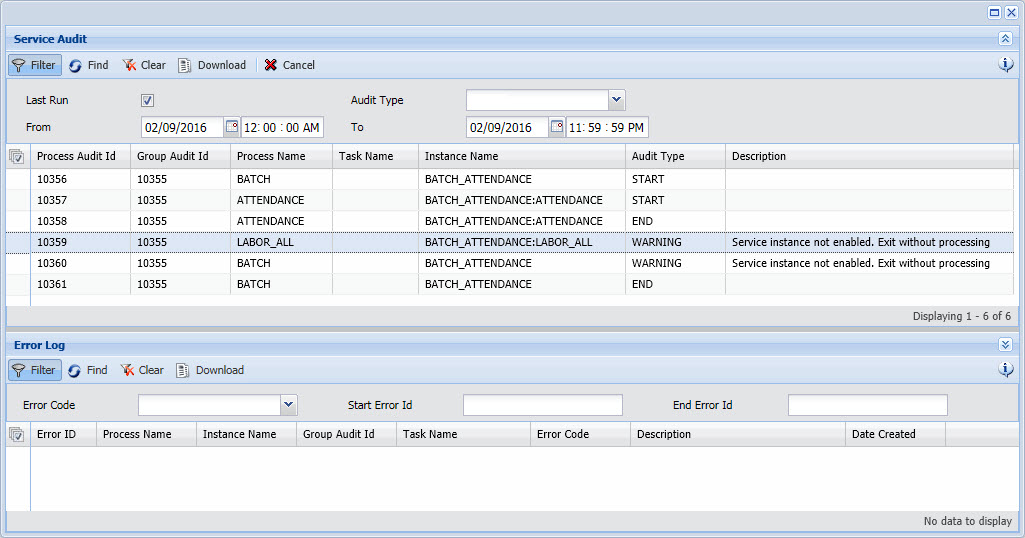

If the BATCH service fails, the Service Audit form will also show which instance in the batch caused it to stop processing. In the example below, the BATCH_ATTENDANCE service includes the ATTENDANCE, LABOR_ALL_MT, and RECALCULATION instances. The LABOR_ALL_MT instance was disabled, causing the BATCH service to stop processing.

Process Name: CALCULATE_RATES

Default Schedule: None

Module Required: Pay Rates

The CALCULATE_RATES service calculates the PAID_PERSON_PAY and PAID_PERSON_LABOR rates for a transaction. See Trans Rates for more information on the rates that are stored with a transaction.

The service calculates these rates based on the Pay Rate Ruleset and Labor Rate Ruleset defined in the employee’s Pay Policy.

The LABOR_ALL_MT service also calculates these rates.

RATE_TYPE: Select the type of rate you want the service to calculate. Select Pay to calculate Payroll Rates (PAID_PERSON_PAY rate). Select Labor to calculate Labor Rates (PAID_PERSON_LABOR rate). Select Both to calculate both Payroll and Labor Rates.

RUN_FOR: Select POLICIES if you want to calculate rates for employees with specific Pay Policies. Make sure you specify these Pay Policies in the Selected column of the PAY_POLICY parameter. Select TRANSACTIONS if you want to calculate rates for all transactions that have not yet had their rates calculated.

PAY_POLICY: If you selected POLICIES as your RUN_FOR parameter, use this section to indicate which Pay Policies should have their rates calculated. Move the Pay Policy from Available to Selected if you want it to be processed. If there are no Pay Policies in the Selected column, the service will calculate rates for all the Pay Policies.
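The parameter combinations above can be sketched in Python as a selection function. The `Transaction` class and `transactions_to_rate` are hypothetical. The sketch assumes each transaction carries the Pay Policy in effect for it, and that with RUN_FOR set to POLICIES the service rates all transactions for the matching policies whether or not they were rated before; the text does not say which date range is included.

```python
from dataclasses import dataclass
from typing import List

# RATE_TYPE option -> rates the service calculates (from the text above).
RATES_FOR_TYPE = {
    "Pay": ["PAID_PERSON_PAY"],
    "Labor": ["PAID_PERSON_LABOR"],
    "Both": ["PAID_PERSON_PAY", "PAID_PERSON_LABOR"],
}


@dataclass
class Transaction:
    person: str
    pay_policy: str  # assumed: the Pay Policy in effect for this transaction
    rates_calculated: bool = False


def transactions_to_rate(transactions: List[Transaction], run_for: str,
                         selected_pay_policies: List[str]) -> List[Transaction]:
    """Pick the transactions a CALCULATE_RATES instance would rate."""
    if run_for == "TRANSACTIONS":
        return [t for t in transactions if not t.rates_calculated]
    if run_for == "POLICIES":
        if not selected_pay_policies:  # empty Selected column: all Pay Policies
            return list(transactions)
        return [t for t in transactions if t.pay_policy in selected_pay_policies]
    raise ValueError(f"unknown RUN_FOR value: {run_for}")
```

Note that an empty Selected column means "all Pay Policies" here, which is the opposite of some other services in this section where an empty selection means nothing is processed.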

Process Name: CALL_STORED_PROCEDURE

Instance Name: PURGE_SQLSERVER_SEQUENCES

Default Schedule: None

This service is only needed if your Shop Floor Time database version is Microsoft SQL Server.

This service runs a stored procedure on your Shop Floor Time database that purges the identity tables in a SQL Server database (tables for each sequence that hold an identity column). The stored procedure deletes all records from all tables whose names start with “seq_”, except the record with the highest ID value.

How often you run this service will depend on how quickly the sequence tables are growing. For example, you may want to run this service once a week.

The CALL_STORED_PROCEDURE service has one parameter called STORED_PROCEDURE_NAME. Do not change the value of this parameter. It identifies the stored procedure that will be executed.
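The purge rule can be illustrated in Python with the standard-library `sqlite3` module. SQLite is only a stand-in here: the real service calls a stored procedure on SQL Server, and its code is not shown in this document. The `purge_sequence_tables` function and the `id` column name are hypothetical. For each table whose name starts with `seq_`, the sketch deletes every row except the one with the highest ID.

```python
import sqlite3
from typing import List


def purge_sequence_tables(conn: sqlite3.Connection, prefix: str = "seq_",
                          id_column: str = "id") -> List[str]:
    """Keep only the highest-ID row in every table whose name starts with `prefix`."""
    names = [row[0] for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")]
    purged = []
    for name in names:
        if not name.startswith(prefix):  # plain prefix test; LIKE would treat "_" as a wildcard
            continue
        table = '"' + name.replace('"', '""') + '"'  # quote identifiers safely
        col = '"' + id_column.replace('"', '""') + '"'
        # An empty table gives MAX() = NULL, so the comparison matches no rows.
        conn.execute(f"DELETE FROM {table} WHERE {col} < (SELECT MAX({col}) FROM {table})")
        purged.append(name)
    conn.commit()
    return purged
```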

Process Name: CLASSIFY_MANUAL

Default Schedule: None

Module Required: None

The CLASSIFY_MANUAL service will post the appropriate hours classifications to timecard transactions for the current or previous pay period. The service will classify the timecards of employees assigned to Pay Policies that have Time Classification set to MANUAL.

The CLASSIFY_MANUAL service will only affect transactions that are not yet classified. If a transaction already has an hours classification, the CLASSIFY_MANUAL service will not change it.

Manual time classification is often used as part of the EWT/Comp Time feature. Manual time classification allows you to wait and classify the timecard after all the hours have been worked and all EWT or Comp Time has been authorized. With this method, adjustments to the timecard will not affect the hours classifications, and you will not have to reprocess the payroll export unnecessarily.

The CLASSIFY_MANUAL service allows you to classify employee timecards without waiting for a supervisor review or signature. Manual time classification can also be performed by the Classify Period button on the timecard, or the CLASSIFY Sign Trigger in a Sign Policy.

The CLASSIFY_MANUAL service should be run prior to a Payroll Export.

PERIOD_OFFSET: Indicates whether the service will classify the PREVIOUS_PAY_PERIOD or CURRENT_PAY_PERIOD. The pay period is determined based on the person’s Pay Policy that is in effect on the date the service runs.

PAY_POLICY: The service will classify the timecards of persons assigned to the Pay Policies selected in this parameter. To determine a person’s Pay Policy, the service will look at the Pay Policy assigned to the person on the date the service runs.

Move the Pay Policy from Available to Selected if you want the CLASSIFY_MANUAL service to classify timecards for persons assigned to the policy. The Available column will only display the Pay Policies that are configured for Manual time classification (Time Classification is set to MANUAL in the Pay Policy). The CLASSIFY_MANUAL service will classify timecards for the Pay Policies in the Selected column.
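The selection and "classify only once" rules can be sketched in Python. The function, the dictionary shapes, the non-manual value `AUTO`, and the `classify` callable (which stands in for however the product actually assigns an hours classification) are all hypothetical. The sketch also assumes the transactions passed in are already limited to the pay period chosen by PERIOD_OFFSET, and it reads the text literally: with nothing in the Selected column, nothing is classified.

```python
from typing import Callable, Dict, List


def classify_manual(transactions: List[dict], person_pay_policy: Dict[str, str],
                    time_classification: Dict[str, str], selected_policies: List[str],
                    classify: Callable[[dict], str]) -> int:
    """Classify unclassified transactions for persons on Selected MANUAL Pay Policies.

    Returns the number of transactions that received a classification.
    """
    # Only MANUAL policies appear in the Available column, so only they can be selected.
    eligible = {p for p in selected_policies if time_classification.get(p) == "MANUAL"}
    changed = 0
    for txn in transactions:
        if person_pay_policy.get(txn["person"]) not in eligible:
            continue  # Pay Policy on the run date is not selected
        if txn.get("classification"):
            continue  # already classified: CLASSIFY_MANUAL leaves it unchanged
        txn["classification"] = classify(txn)
        changed += 1
    return changed
```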

Process Name: COMPLETE_OFFLINE_STATE_CONTROLLER

Default Schedule: None

Module Required: Terminal Operating State

The COMPLETE_OFFLINE_STATE_CONTROLLER service will change the Operating State of the terminals that have been assigned a Terminal Off Policy. You can use this service to establish a schedule when terminals that are operating in COMPLETE_OFFLINE mode will send their offline transactions to the application server for processing. See Permanent Offline Data Collection with Scheduled Data Pull for more information.

STATE: Select the Operating State to which the service will change the terminals. The available options are ONLINE, OFFLINE_QUEUED, OFFLINE_PROCESSING, and OFFLINE_NO_PUNCH_TRANSMISSION. However, the service can only change a terminal’s Operating State to certain allowed options, depending on what the terminal’s operating state is when the service runs. See Operating State for more information.

TERMINAL_OFFLINE_POLICY: Select the Terminal Off Policies that will have their Operating State changed to the specified STATE. The Terminal Off Policy is assigned to a Terminal Profile via the Terminal Profile Setting tab. If you want this instance to apply to specific Terminal Off Policies, move the policies from the Available column to the Selected column. If no Terminal Off Policies are in the Selected column, this instance will apply to all Terminal Off Policies.

Process Name: DELETE_FILES

Default Schedule: None

Module Required: Reporting

The DELETE_FILES service deletes import-related or reporting-related files based on the directory associated with the selected CATEGORY and the RETAIN_DAYS value. If the time between a file's creation date and the current date is equal to or greater than the RETAIN_DAYS value, the file is deleted.

CATEGORY: Identifies the type of files you want to delete (IMPORTS or REPORTS).

IMPORTS - Applies to backup files generated via the IMPORT_FILES service. When selected, any import data backup files located in the Shop Floor Time\import directory that are older than the RETAIN_DAYS value will be deleted. Subdirectories are also deleted.

REPORTS - Applies to reports generated via the Reporting menu. When selected, files located in Shop Floor Time's \Reporting\birt\design\tmp directory are deleted. Subdirectories are also deleted.

RETAIN_DAYS: The number of days that the system compares with the creation date of the files in the selected category. If the time between a file's creation date and the current date is equal to or greater than this value, the file is deleted. The default value is 30.
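The retention check can be sketched in Python with the standard library. Two assumptions are labeled in the code: the file modification time stands in for the creation date (creation time is not exposed portably across file systems), and a subdirectory is removed as a whole when the subdirectory itself is old enough. The `delete_old_entries` function is hypothetical.

```python
import os
import shutil
import time
from typing import List, Optional

SECONDS_PER_DAY = 86400


def delete_old_entries(directory: str, retain_days: int = 30,
                       now: Optional[float] = None) -> List[str]:
    """Delete entries in `directory` whose age is >= retain_days; return their names."""
    now = time.time() if now is None else now
    with os.scandir(directory) as it:
        entries = list(it)  # snapshot first, so deleting does not disturb iteration
    deleted = []
    for entry in entries:
        # Assumption: modification time stands in for the creation date.
        age_days = (now - entry.stat(follow_symlinks=False).st_mtime) / SECONDS_PER_DAY
        if age_days < retain_days:
            continue
        if entry.is_dir(follow_symlinks=False):
            shutil.rmtree(entry.path)  # assumption: old subdirectories go with their contents
        else:
            os.remove(entry.path)
        deleted.append(entry.name)
    return sorted(deleted)
```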

Process Name: DISCIPLINE_BALANCE

Default Schedule: None

Module Required: Discipline Balance Policy forms

The DISCIPLINE_BALANCE service is used to accumulate points to a person's Discipline Balances, apply the appropriate level to a Discipline Balance, and reduce a Discipline Balance based on the person’s Discipline Balance history. These actions are each performed by a different task of the DISCIPLINE_BALANCE service.

Discipline Balances are used to manage a person’s attendance infractions when a number of these infractions occur in a specific time period. For example, a person can earn a point for having two Unexcused Absences in 30 days. Disciplinary levels and tickets can also be issued based on these points.

The DISCIPLINE_BALANCE service uses the rulesets and settings in a person’s Discipline Balance Policy to update the person’s discipline balances. See How the DISCIPLINE_BALANCE Service Works.

The DISCIPLINE_BALANCE service’s END_POST_DATE_OFFSET parameter defines the end of the processing range. The service will run for each post date since the last run, up until the date determined by this offset.

How the DISCIPLINE_BALANCE Service Works


The DISCIPLINE_BALANCE service looks at a person’s Discipline Balance Policy to see what Discipline Balance Codes it includes and how they are configured. For each Discipline Balance Code, the Discipline Balance Policy has settings and rulesets that are used to accumulate the balance, change its level, or reduce it. These rulesets correspond to the DISCIPLINE_BALANCE service’s tasks. For each Discipline Balance Code, the service processes the rules for these tasks in order (BALANCE_ACCUMULATION, BALANCE_LEVELS, and BALANCE_REDUCTION).

If the person’s Discipline Balance Policy has the Run Indicator set to Run Date, the DISCIPLINE_BALANCE service will process the rulesets once per person based on the day the service runs, regardless of the last time it ran. The service uses the END_POST_DATE_OFFSET parameter to determine which date to process. For example, if today is March 25, the END_POST_DATE_OFFSET parameter is -7, and the Run Indicator is Run Date, the DISCIPLINE_BALANCE service will only process March 18.

If the person’s Discipline Balance Policy has the Run Indicator set to Each Post Date, the DISCIPLINE_BALANCE service will run for each post date since the last time the service ran (indicated by the Last Accum. Date and Last Reduction Date in the person’s Discipline Balance record), up to the date determined by the END_POST_DATE_OFFSET parameter. For example, if END_POST_DATE_OFFSET is set to -1, the service will process all the post dates from the Last Accum. Date/Last Reduction Date up until yesterday.
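The two Run Indicator settings can be sketched in Python as a function that returns the post dates one run covers. The function name and the `RUN_DATE`/`EACH_POST_DATE` constants are hypothetical labels. Following the wording and the example in this section, the Each Post Date range includes the Last Accum./Last Reduction Date itself.

```python
from datetime import date, timedelta
from typing import List, Optional


def discipline_post_dates(run_indicator: str, today: date, end_post_date_offset: int = -1,
                          last_processed: Optional[date] = None) -> List[date]:
    """Return the post dates one DISCIPLINE_BALANCE run covers for a person."""
    end = today + timedelta(days=end_post_date_offset)  # 0 = today, -1 = yesterday
    if run_indicator == "RUN_DATE":
        return [end]  # one date per run, regardless of the last run
    if run_indicator == "EACH_POST_DATE":
        if last_processed is None:
            raise ValueError("EACH_POST_DATE needs the Last Accum./Last Reduction Date")
        span = (end - last_processed).days + 1  # start date included, per the example
        return [last_processed + timedelta(days=i) for i in range(max(span, 0))]
    raise ValueError(f"unknown Run Indicator: {run_indicator}")


print(discipline_post_dates("RUN_DATE", date(2016, 3, 25), -7))  # [datetime.date(2016, 3, 18)]
```

With Each Post Date, a Last Accum. Date of 3/7/2016, and a run on 3/17/2016 with an offset of -1, the sketch returns the ten dates from 3/7 through 3/16, matching the example later in this section.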

The service will update the person’s Discipline Balance record accordingly with any changes in level or tickets.

When an adjustment is made to a person’s timecard (a transaction is added, modified, or deleted on the timecard), and the transaction has a discipline balance record, the DISCIPLINE_BALANCE service will recalculate the person’s discipline balance, levels, and tickets.

Note that if the DISCIPLINE_BALANCE service has to process an adjusted transaction, it may take longer to run the service because it has more data to process over a longer period of time.

Example

The following illustrations explain how the DISCIPLINE_BALANCE service works.

A company has a policy that a Tardy event (Late Arrival) in the last 30 days results in 1 discipline balance point. An employee’s initial Discipline Balance is 0. However, he has tardy events on 2/23, 3/1, 3/8, and 3/15 that have not yet been processed. The person's Discipline Balance Policy has the Run Indicator set to Each Post Date.

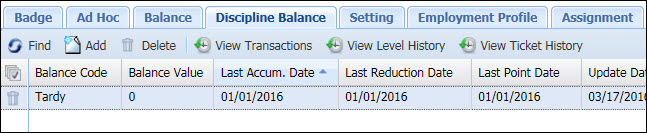

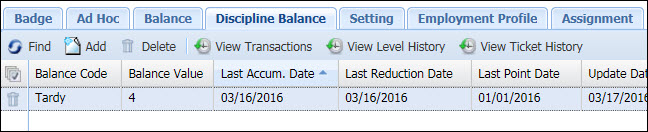

You can view the employee’s balance in the Discipline Balance tab of the Employee form.

On 3/17/2016, the DISCIPLINE_BALANCE service runs. It processes transactions from the Last Accum. Date and Last Reduction Date (1/1/2016) to the service’s END_POST_DATE_OFFSET parameter (3/16/2016).

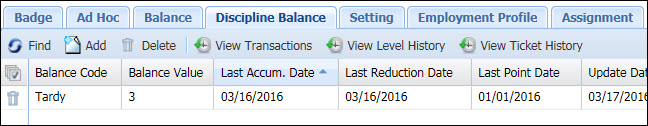

After the DISCIPLINE_BALANCE service runs, the Balance Value changes to 3.

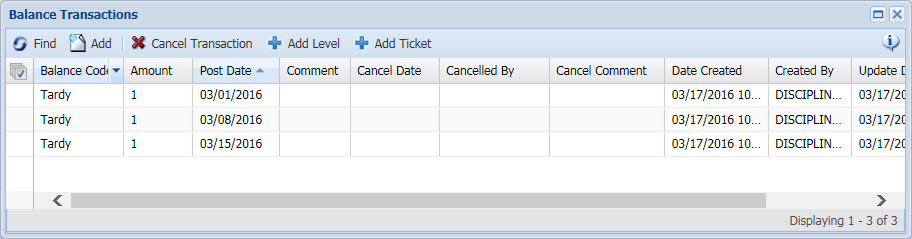

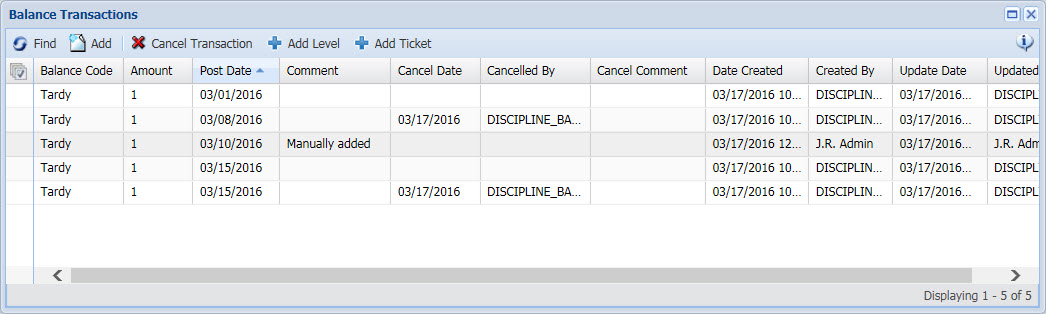

If you click View Transactions, you will see that the Balance Transactions are for the Tardy events on 3/1, 3/8, and 3/15.

The employee forgot to report he was late on 3/10/2016, so the supervisor manually adds a Balance Transaction of 1 point for this date.

The person’s Balance Value is now 4.

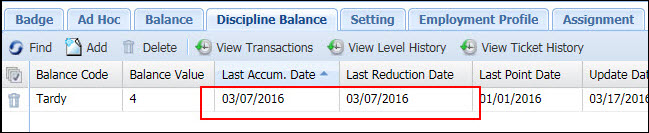

The employee tells the supervisor that the Tardy event on 3/8/2016 was an error. The supervisor adjusts the employee’s timecard for this date and the Tardy event is removed.

This adjustment causes the Last Accum. Date and Last Reduction Date in the person’s Discipline Balance record to change. They change to one day prior to the date of the adjusted transaction (in this case, 3/7/2016). These date changes will allow the DISCIPLINE_BALANCE service to reprocess the transactions to adjust for the one that was removed.

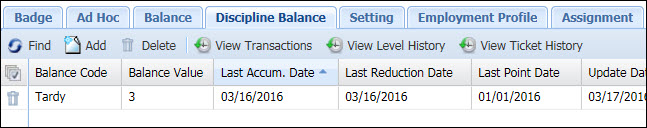

The DISCIPLINE_BALANCE service runs again. It processes records from the Last Accum. Date/Last Reduction Date of 3/7/2016 to 3/16/2016 (yesterday, as determined by the service’s END_POST_DATE_OFFSET parameter of -1). First, the service deactivates all the discipline balance transactions in this range except the ones added manually. In this case, the transactions on 3/8 and 3/15 get deactivated. Next, the service processes the same date range but this time it processes the post dates as it normally would, applying the rules and updating the balance. It is during this step that the transaction on 3/15/2016 is added back.

The employee’s Balance Value is now 3.

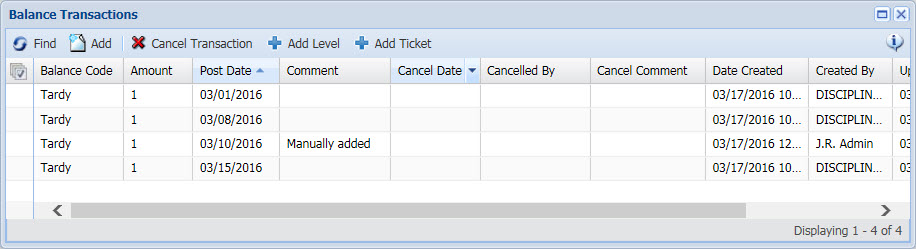

The updated Balance Transactions are shown below.

The transaction for 3/1 was not affected because it was outside the date range being processed by the DISCIPLINE_BALANCE service.

The transaction for 3/8 has been cancelled.

The transaction for 3/10 was not affected because it was added manually.

The transaction for 3/15 was cancelled but then added back.
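The two-pass reprocessing in this example can be replayed in Python. This is a simplified model: the `BalanceTxn` class and `reprocess` function are hypothetical, and the Discipline Ruleset is reduced to "1 point per Tardy event in the range" rather than the real 30-day rule. The sketch starts from the state after the first run plus the manual 3/10 transaction.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Set


@dataclass
class BalanceTxn:
    post_date: date
    points: int
    manual: bool = False
    active: bool = True


def balance_value(txns: List[BalanceTxn]) -> int:
    return sum(t.points for t in txns if t.active)


def reprocess(txns: List[BalanceTxn], start: date, end: date, tardy_dates: Set[date]) -> None:
    # Pass 1: deactivate service-generated transactions in the range; manual ones stay.
    for t in txns:
        if start <= t.post_date <= end and t.active and not t.manual:
            t.active = False
    # Pass 2: re-apply the simplified rule (1 point per Tardy event) to the same range.
    for d in sorted(tardy_dates):
        if start <= d <= end:
            txns.append(BalanceTxn(d, 1))


txns = [BalanceTxn(date(2016, 3, 1), 1), BalanceTxn(date(2016, 3, 8), 1),
        BalanceTxn(date(2016, 3, 15), 1), BalanceTxn(date(2016, 3, 10), 1, manual=True)]
print(balance_value(txns))  # 4
# The 3/8 Tardy was removed from the timecard, so only 3/1 and 3/15 remain.
reprocess(txns, date(2016, 3, 7), date(2016, 3, 16), {date(2016, 3, 1), date(2016, 3, 15)})
print(balance_value(txns))  # 3
```

As in the walkthrough, 3/1 is untouched because it is outside the range, 3/8 stays deactivated, the manual 3/10 transaction survives, and 3/15 is deactivated and then added back.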

Note that if the person’s Discipline Balance Policy has the Run Indicator set to Run Date, the DISCIPLINE_BALANCE service may not be able to reprocess timecard adjustments that affect a person’s discipline balance. For example, a supervisor removes an absence event on March 18 from a person’s timecard. This adjustment changes the Last Accum. Date and Last Reduction Date in the person’s Discipline Balance record to March 17. The DISCIPLINE_BALANCE service has an END_POST_DATE_OFFSET parameter of -1. The DISCIPLINE_BALANCE service runs on March 25. If the person’s Discipline Balance Policy has the Run Indicator set to Run Date, the service only processes records from yesterday (March 24) as determined by the END_POST_DATE_OFFSET. If the person’s Discipline Balance Policy had the Run Indicator set to Each Post Date, the service processes records from March 17 to March 24, and it can reduce the person’s discipline balance due to the timecard adjustment.

You can configure a different instance of the DISCIPLINE_BALANCE service to process each task, or you can include one or more of these tasks in a single instance. If you separate each task into its own instance, you can change the order in which the tasks run. If all the tasks are in a single instance, they will run in the following order: BALANCE_ACCUMULATION, BALANCE_LEVELS, and BALANCE_REDUCTION.

BALANCE_ACCUMULATION

This task processes the Discipline Ruleset in the Discipline Balance Policy. This task looks at the person’s timecard to find any violations (e.g., unexcused absences or late arrivals) and adds the appropriate number of points to the Discipline Balance Code.

BALANCE_LEVELS

This task processes the Level Ruleset Name in the Discipline Balance Policy. This task looks at the amount of points in a person’s Discipline Balance and determines what level, if any, to assign it.

BALANCE_REDUCTION

This task processes the Reduction Ruleset in the Discipline Balance Policy. This task examines a person’s Discipline Balance history to determine if the balance can be reduced.

END_POST_DATE_OFFSET: This parameter defines the end of the processing range. A value of 0 means today. The default value is -1 (yesterday), giving supervisors a chance to make adjustments to the current day before the DISCIPLINE_BALANCE service processes it.

The Run Indicator in the person’s Discipline Balance Policy determines how the END_POST_DATE_OFFSET is used.

If the person’s Discipline Balance Policy has the Run Indicator set to Run Date, the service uses the END_POST_DATE_OFFSET to determine which date to process. For example, if today is March 25, the END_POST_DATE_OFFSET is -7, and the Run Indicator is Run Date, the DISCIPLINE_BALANCE service will only process March 18.

If the person’s Discipline Balance Policy has the Run Indicator set to Each Post Date, the DISCIPLINE_BALANCE service will run for each post date since the last time the service ran (indicated by the Last Accum. Date and Last Reduction Date in the person’s Discipline Balance record), up to the date determined by the END_POST_DATE_OFFSET. For example, if END_POST_DATE_OFFSET is -1, the service will process all the post dates from the Last Accum. Date/Last Reduction Date up until yesterday.
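The Python sketch below shows how the processed post dates could be derived from END_POST_DATE_OFFSET under each Run Indicator setting. It is an illustrative model of the rules described above, not the service's implementation. The function name `post_dates_to_process` is hypothetical. The Each Post Date range is assumed to start at, and include, the Last Accum./Last Reduction Date, as in the March 17 example.

```python
from datetime import date, timedelta


def post_dates_to_process(run_indicator, run_date, end_post_date_offset, last_processed=None):
    """Post dates one DISCIPLINE_BALANCE run would cover (illustrative model only)."""
    # END_POST_DATE_OFFSET is added to the run date: 0 = today, -1 = yesterday.
    end = run_date + timedelta(days=end_post_date_offset)
    if run_indicator == "Run Date":
        # Only the single date selected by the offset is processed.
        return [end]
    if run_indicator == "Each Post Date":
        # Every post date from the Last Accum./Last Reduction Date up to the offset date.
        if last_processed is None:
            raise ValueError("Each Post Date needs a last processed date")
        span = (end - last_processed).days
        return [last_processed + timedelta(days=i) for i in range(span + 1)]
    raise ValueError(f"unknown Run Indicator: {run_indicator!r}")


# Worked examples from the text (the year 2024 is arbitrary).
print(post_dates_to_process("Run Date", date(2024, 3, 25), -7))
print(post_dates_to_process("Each Post Date", date(2024, 3, 25), -1, date(2024, 3, 17)))
```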

Process Name: EXCEPTION_MESSAGE_CREATION

Default Schedule: None

Module Required: Messaging

The EXCEPTION_MESSAGE_CREATION service creates messages when there is an “exception” with a service instance (e.g., the service has an error or runs too long) or when a particular Error Code is reported in the Error Log. These messages are created based on rules assigned to a Message Definition in the service’s parameter.

You must use the EXCEPTION_MESSAGE_CREATION service’s MESSAGE_NAME parameter to define which Message Definitions will be used when creating the messages.

You can configure different instances of the EXCEPTION_MESSAGE_CREATION service to create specific message types. For example, you can configure an instance specifically for service audit errors.

To create an Exception message, the EXCEPTION_MESSAGE_CREATION service processes the Message Definitions in its parameter. For example, if the service is processing the SERVICE_AUDIT_ERROR message, it fires the ServiceAuditErrorRuleset. This ruleset checks to see if the ATTENDANCE service (or any service configured in the rule) had any errors in the last 5 hours and if so, a message is sent to a Message Group.

MESSAGE_NAME: This parameter defines the Message Definitions for which the service will generate messages. The available options are Message Definitions with the EXCEPTION Message Type.

Move the Message Definitions from the Available column to the Selected column to enable them. The EXCEPTION_MESSAGE_CREATION service will only generate messages based on the Selected Message Definitions. If the Selected column is empty, the service will not generate any messages.
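The selection rule above can be sketched as a filter. This Python model is illustrative only. The `MessageDefinition` class and the `definitions_to_process` function are hypothetical, and they encode only the two documented conditions: the definition has the EXCEPTION Message Type, and it appears in the Selected column.

```python
from dataclasses import dataclass


@dataclass
class MessageDefinition:
    name: str
    message_type: str  # e.g. "EXCEPTION", "DIALOG", or "SYSTEM"


def definitions_to_process(all_definitions, selected_names):
    """Names of the Message Definitions an EXCEPTION_MESSAGE_CREATION instance would use."""
    selected = set(selected_names)
    # Only EXCEPTION-type definitions that were moved to the Selected column qualify;
    # an empty selection therefore yields no messages at all.
    return [d.name for d in all_definitions
            if d.message_type == "EXCEPTION" and d.name in selected]
```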

Process Name: EXPIRE_OFFERS

Default Schedule: None

Module Required: Overtime Offer and Response Service and Forms

The EXPIRE_OFFERS service updates overtime offers that have a status of Offered or Acknowledged and have expired per the Cutoff Date field in the OT Offer and OT Response forms. The service changes the offer status to Refused After Cutoff. Note that offers with a status of Refused After Cutoff can still be accepted (the status will be Accepted After Cutoff).
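The status changes described above can be modeled as two small transitions. This is a hypothetical sketch, not the product's code. The function names are invented. Treating an offer as expired only once the current time is strictly after the Cutoff Date is an assumption, and so is "Accepted" as the status for an on-time acceptance.

```python
from datetime import datetime

# Statuses the EXPIRE_OFFERS service looks at, per the text above.
EXPIRABLE = {"Offered", "Acknowledged"}


def expire_offer(status, cutoff, now):
    """Status an EXPIRE_OFFERS run would leave on a single offer."""
    if status in EXPIRABLE and now > cutoff:
        return "Refused After Cutoff"
    return status  # not expired yet, or already in some other status


def accept_offer(status):
    """Status after the employee accepts the offer."""
    if status == "Refused After Cutoff":
        return "Accepted After Cutoff"
    return "Accepted"  # assumption: name of the normal accepted status


cutoff = datetime(2024, 3, 1, 12, 0)
print(expire_offer("Offered", cutoff, datetime(2024, 3, 1, 13, 0)))
```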

Process Name: EXPORT

Default Schedule: None

Module Required: Export

The EXPORT service can be used to run a specific Export Definition. The Export Definition you select will determine the type of data that will be exported, how the data will be formatted, and the destination for the exported data (file system, queue, or table).

You can schedule the EXPORT service to run automatically (via the Service Schedule tab) or you can run the service instance manually via the Service Monitor form.

Note: You can also run exports from the Exports form, provided the Export Destination is FILE or TABLE. When you run an export from the Exports form, you can include data entered in user-defined fields; this data is not included when the export is run by an EXPORT service instance. From the Exports form, you can also specify whether to include Previously Exported Records and download exports that have already been generated.

The OUT_CONVERT and OUT_TEXT_CONVERT tasks are used when the Export Definition has Export Destination set to QUEUE. These tasks will transfer the export data from the Out XML Queue to the Interface Out Queue.

OUT_CONVERT: Select this Task if the Export Definition has Export Destination set to QUEUE and the Export Type is BCOREXML or XML. This Task takes the export data from the Out XML Queue and places it in the Interface Out Queue.

OUT_TEXT_CONVERT: Select this Task if the Export Definition has Export Destination set to QUEUE and the Export Type is CSV, CSV With Header, Fixed Length, or JSON. This Task takes the export data from the Out XML Queue and places it in the Interface Out Queue.

If the Export Definition has Export Destination set to QUEUE and neither of these Tasks is selected, the data will only be sent to the Out XML Queue.

Make sure you also configure the INTERFACE_NAME, TRANSACTION_NAME, and SENDER_NAME parameters so the data can be exported to the Interface Out Queue.
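The task and parameter rules for QUEUE destinations can be summarized in a short Python sketch. The helper names are hypothetical. The model covers only what the text states: which conversion task matches each Export Type, and which parameters must be set when the destination is QUEUE.

```python
XML_TYPES = {"BCOREXML", "XML"}
TEXT_TYPES = {"CSV", "CSV With Header", "Fixed Length", "JSON"}
QUEUE_PARAMS = ("INTERFACE_NAME", "TRANSACTION_NAME", "SENDER_NAME")


def queue_task_for(destination, export_type):
    """Conversion task an EXPORT instance should select, or None if none is needed."""
    if destination != "QUEUE":
        return None  # FILE and TABLE destinations use neither task
    if export_type in XML_TYPES:
        return "OUT_CONVERT"
    if export_type in TEXT_TYPES:
        return "OUT_TEXT_CONVERT"
    # The text lists no other Export Types for QUEUE, so refuse to guess.
    raise ValueError(f"Export Type not covered by the text: {export_type!r}")


def missing_queue_params(destination, params):
    """Queue parameters that must still be set before the service can run."""
    if destination != "QUEUE":
        return []
    return [p for p in QUEUE_PARAMS if not params.get(p)]
```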

MODE: The MODE parameter determines the posting date of the records that will be exported. Options are ALL, TODAY, and DATE RANGE.

ALL causes the service to export all unprocessed records (i.e., records with a status of Ready) with the current date, a prior date, or a future date.

TODAY causes the service to export all unprocessed records (i.e., records with a status of Ready) up until today.

DATE RANGE causes the service to export all unprocessed records (i.e., records with a status of Ready) in a specific date range. If you select DATE RANGE, you must also specify a START_DATE and END_DATE.

START_DATE, END_DATE: If you select DATE RANGE as your MODE parameter, you must select the START_DATE and END_DATE of the date range of the records to export.

EXPORT_DEFINITION: Identifies which Exports record the instance should run for. Exports records are created in the Exports form.

EXPORT_CREATOR: Identifies who the export belongs to. The default value is Admin User. Only the Export Creator has access to the output file in the Output form.

INTERFACE_NAME, TRANSACTION_NAME, and SENDER_NAME: If the EXPORT_DEFINITION you selected (above) has its Export Destination set to QUEUE, you must set the INTERFACE_NAME, TRANSACTION_NAME, and SENDER_NAME parameters, using values that match ones in the Distribution Model form. If these parameters are missing or do not match, an error will occur when you run the EXPORT service. These parameters are used to populate values in the Interface Out Queue when the export data is moved from the Out XML Queue to the Interface Out Queue. You do not need to set them if your EXPORT_DEFINITION has its Export Destination set to FILE or TABLE.

The POST_DATE parameter in your Export Definition is used to restrict the transactions that will be exported based on their Post Date. For example, you may want to prevent transactions with a Post Date in the future from being exported.

Make sure the MODE parameter for the EXPORT service coincides with the Export Definition’s POST_DATE parameter. If these settings are different, then the more restrictive setting will be used.
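One way to picture how MODE and the Export Definition's POST_DATE parameter interact is to apply both as filters, so a record must pass each one. Whichever setting is more restrictive then determines the result. In this hypothetical Python sketch, `post_date_ok` stands in for the Export Definition's POST_DATE restriction. Treating TODAY and DATE RANGE as inclusive of their end dates is an assumption.

```python
from datetime import date


def mode_allows(mode, post_date, today, start_date=None, end_date=None):
    """Does the EXPORT service's MODE parameter admit this post date?"""
    if mode == "ALL":
        return True  # past, current, and future post dates
    if mode == "TODAY":
        return post_date <= today  # assumption: "up until today" includes today
    if mode == "DATE RANGE":
        if start_date is None or end_date is None:
            raise ValueError("DATE RANGE requires START_DATE and END_DATE")
        return start_date <= post_date <= end_date  # assumption: inclusive range
    raise ValueError(f"unknown MODE: {mode!r}")


def records_to_export(records, mode, today, post_date_ok, **range_args):
    """Ready records that pass both MODE and the Export Definition's POST_DATE filter."""
    return [
        r for r in records
        if r["status"] == "Ready"
        and mode_allows(mode, r["post_date"], today, **range_args)
        and post_date_ok(r["post_date"])
    ]


# Example: MODE is ALL, but the POST_DATE filter excludes future post dates.
today = date(2024, 3, 25)
sample = [{"status": "Ready", "post_date": date(2024, 3, 24)},
          {"status": "Ready", "post_date": date(2024, 3, 27)}]
print(records_to_export(sample, "ALL", today, lambda d: d <= today))
```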

Process Name: IMPORT_FILES

Default Schedule: None

Module Required: Import Data

The IMPORT_FILES service applies to the Import Data feature. The service reads data from the source you indicate in all of the enabled Import records (in the Import Definition form) and converts it to XML records in the application. The raw XML records can be viewed in the In XML Queue Detail form. The service also populates the tables that apply to the Context Names (i.e., Transaction Names) you define, such as PERSON and PERSON GROUP. For example, when source data is mapped to the PERSON context, the service imports the data and populates the person table.

The IMPORT_FILES service applies to the "Import" interface record.

IN_CONVERT: Converts the source data to XML format. The raw XML records can be viewed in the In XML Queue Detail form.

IN_XML_PROCESS: Populates the appropriate tables with the source data that was converted to XML format.

LOAD TYPE: You can choose to run an Incremental Load or Full Load.

Incremental Load: The service will add data to the applicable tables and update any existing records whose mandatory field data matches the mandatory field data in the new source data. Examples of mandatory fields are FI_FIRST_NAME and FI_PERSON_NUM for Person.

Full Load: The service will update all applicable tables and make non-matching records inactive. A record is considered non-matching when the mandatory field name data in the existing record does not correspond with mandatory field name data in any of the source data.

Note: Full Load is not supported for Person Group. If you select Full Load, the service will run Incremental Load for Person Group records.
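The difference between the two load types can be sketched as follows. This Python model is an illustration built on assumptions, not the IMPORT_FILES implementation. Records are plain dictionaries, `key_fields` lists the mandatory fields used for matching, and the `active` flag is a hypothetical stand-in for however the application marks a record inactive.

```python
def apply_import(existing, incoming, key_fields, load_type, context):
    """Return the table contents after an import (illustrative model).

    existing, incoming: lists of dicts; key_fields: mandatory field names.
    load_type: "Incremental" or "Full"; context: e.g. "PERSON" or "PERSON GROUP".
    """
    def key(rec):
        return tuple(rec[f] for f in key_fields)

    # Full Load is not supported for Person Group; it falls back to Incremental.
    if context == "PERSON GROUP" and load_type == "Full":
        load_type = "Incremental"

    table = {key(r): dict(r) for r in existing}  # copy so the input is untouched
    incoming_keys = set()
    for rec in incoming:
        k = key(rec)
        incoming_keys.add(k)
        if k in table:
            table[k].update(rec)  # mandatory fields match: update the record
        else:
            table[k] = dict(rec)  # no match: add a new record

    if load_type == "Full":
        for k, rec in table.items():
            if k not in incoming_keys:
                rec["active"] = False  # non-matching record: make it inactive
    return list(table.values())
```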

IMPORT_NAME: The service will run only for the Import Names listed in the Selected box. The Import Names are created and configured in the Import Definition form.

SENDER_NAME: Identifies the sender of the data. Available options are Senders defined in the Interface Host form. The value selected here will populate the Sender Name column in the tables that are populated by the service instance. This value will also be used to populate the Sender Name column in the Distribution Model (interface_distribution_model) table.

TRANSACTION_GROUP: Transaction group is used to group transaction names. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface and Interface Trans forms.

Note: IMPORT is the default/valid transaction group value for the Import interface.

Process Name: IN_AT7

Default Schedule: Run every day at 1 AM, indefinitely

Default Schedule Enabled: No

Module Required: AutoTime Interfaces

The IN_AT7 service downloads data from the In XML Queue.

IN_CONVERT: Converts the source data to XML format. The raw XML records can be viewed in the In XML Queue Detail form.

IN_XML_PROCESS: Populates the appropriate tables with the source data that was converted to XML format.

Process Name: IN_DB_POLLING

Instance Name: IN_BAAN

Default Schedule: None

Module Required: None

Shop Floor Time uses a combination of database triggers and database polling to import charge element data from Baan. Triggers are used to detect add, update and delete actions on the Baan master tables. These triggers write to corresponding autotime_interface_tables created on the Baan database. If a record is added to or updated in the actual table (e.g., ttisfc001100), then a record gets written in the ttisfc001_interface table. When a charge element is deleted in Baan, a record is placed in the autotime_interface_delete table. The IN_BAAN service will poll these interface tables to find the charge elements to import or delete.

IN_DB_POLL: Polls the Baan database to find the data that can be downloaded.

IN_CONVERT: Converts the source data to XML format. The raw XML records can be viewed in the In XML Queue Detail form.

IN_XML_PROCESS: Populates the appropriate tables with the source data that was converted to XML format.

SENDER_NAME: Identifies the sender of the data. Select the name of the Baan instance you defined in the Interface Host form. Available options are Senders defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names for a particular Interface. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface Trans tab of the Interface form.

The IN_BAAN service will poll the Baan interface tables to find charge elements to import or delete. The service uses its SENDER_NAME and TRANSACTION_GROUP parameters to look up the Import Name on the Distribution Model. This Import Name specifies the charge elements to import or delete.

Process Name: IN_ORACLE_EBS

Default Schedule: Run every day at 1 AM, indefinitely

Default Schedule Enabled: No

Module Required: AutoTime Interfaces

The IN_ORACLE_EBS service receives Order, Operation, and Activity data from your external ERP.

IN_DB_POLL: Polls the Oracle EBS database to find the data that can be downloaded.

IN_CONVERT: Converts the source data to XML format. The raw XML records can be viewed in the In XML Queue Detail form.

IN_XML_PROCESS: Populates the appropriate tables with the source data that was converted to XML format.

SENDER_NAME: The value entered here will populate the Sender Name column in the tables that are populated by the service instance. The default value is ORACLE_EBS.

TRANSACTION_GROUP: Select the Transaction Group that will be processed by this instance of the IN_ORACLE_EBS service. For example, select ORACLE_EBS_PROJECT if you want this instance of the IN_ORACLE_EBS service to download projects and tasks from Oracle EBS.

If you do not select a TRANSACTION_GROUP, the service will attempt to process all the available Transaction Groups. It is therefore recommended that you create a separate instance of the IN_ORACLE_EBS service for each Transaction Group you want to process.

A Transaction Group is used to group transaction names. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface and Interface Trans forms.

Process Name: IN_QUEUE_TEXT

Instance Name: IN_AUTOTIME6

Default Schedule: None

Module Required: None

The IN_QUEUE_TEXT service is used to import AutoTime 6 API records into Shop Floor Time.

The service will take the AutoTime 6 API records from the interface_in_queue table (which can be viewed in the Interface In Queue form) and convert the records to a format that Shop Floor Time can read. It then places this data in the In XML Queue form (interface_in_xml_queue table). Finally, the IN_QUEUE_TEXT service imports the AutoTime 6 API data from the interface_in_xml_queue table into the appropriate Shop Floor Time tables.

Before running the IN_QUEUE_TEXT service, you will need to place your AutoTime 6 API records in the interface_in_queue table. See AutoTime 6 API Support for more information.

IN_TEXT_CONVERT: Converts the API data that is in the interface_in_queue table (Interface In Queue form) into a format that Shop Floor Time can read. The service will only process Interface In Queue records that have a Process Name of CONVERT_TEXT. It then places this data in the interface_in_xml_queue table (In XML Queue form).
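The IN_TEXT_CONVERT filtering rule can be sketched in a few lines of Python. The record layout (`process_name`, `payload`) and the `convert` callback are hypothetical placeholders for the real table columns and conversion logic.

```python
def in_text_convert(in_queue, convert):
    """Convert CONVERT_TEXT records from the Interface In Queue for the In XML Queue."""
    xml_queue = []
    for rec in in_queue:
        if rec["process_name"] != "CONVERT_TEXT":
            continue  # records with any other Process Name are skipped
        xml_queue.append(convert(rec["payload"]))
    return xml_queue
```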

IN_XML_PROCESS: Imports the AutoTime 6 API data from the interface_in_xml_queue table into the appropriate Shop Floor Time tables. To do so, the service uses its SENDER_NAME and TRANSACTION_GROUP parameters to look up a record in the Distribution Model that will indicate the Import Name to use.

SENDER_NAME: Identifies the sender of the data (in this case, AUTOTIME6). Available options are Senders defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names for a particular Interface (in this case, AUTOTIME6). The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface Trans tab of the Interface form.

The IN_QUEUE_TEXT service uses its SENDER_NAME and TRANSACTION_GROUP parameters to look up a record in the Distribution Model that will indicate the Import Name to use. The Import Name, defined in the Import Definition form, defines the API source data that will be imported from the interface_in_queue table.

Process Name: IN_SAP

Default Schedule: None

Module Required: SAP Interfaces

The IN_SAP service is used to import data from SAP. For more information, see SAP Interface.

You must create an instance of the IN_SAP service for each type of IDOC you are importing.

Shop Floor Time includes the predefined instances of the IN_SAP service listed below. It is recommended that you copy these system-defined service instances and use the copies, which can be modified as necessary.

IN_SAP_HRCC1DNWBSEL

IN_SAP_HRCC1DNCOSTC

IN_SAP_HRCC1DNINORD

IN_SAP_HRMD_A

IN_SAP_OPERA2

IN_SAP_OPERA3

IN_SAP_OPERA4

IN_SAP_WORKC2

IN_SAP_WORKC3

IN_SAP_WORKC4

The SAP Listener receives an IDOC from SAP and places the data in the Interface In Queue form (interface_in_queue table).

The IN_SAP service will convert the IDOC data that is in the Interface In Queue to a format that Shop Floor Time can read. It then places this data in the In XML Queue form (interface_in_xml_queue table).

Finally, the IN_SAP service will import the data from the In XML Queue to the appropriate Shop Floor Time table. To do so, the IN_SAP service uses its SENDER_NAME and TRANSACTION_GROUP parameters to look up a record in the Distribution Model that will indicate the Import Name to use. The Import Name, defined in the Import Definition form, defines the source data that will be imported from the In XML Queue to the appropriate Shop Floor Time table.

Note: To look up the record in the Distribution Model, the IN_SAP service first checks to see if the Interface In Queue record has a Transaction Name Alias. If the record does have a Transaction Name Alias, the IN_SAP service will use this alias to look up the record in the Distribution Model. If the record does not have a Transaction Name Alias, or if the record’s Transaction Name Alias does not match any records in the Distribution Model, the service will look up the record based on the Transaction Name.
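The alias-then-name lookup described in the note can be sketched as a dictionary lookup. In this hypothetical Python model, the Distribution Model is a dictionary keyed by (sender, transaction group, transaction name), which is an assumption about how the form's columns combine. The example sender SAP_PRD and the Import Names are invented.

```python
def find_import_name(distribution_model, sender, transaction_group, record):
    """Resolve the Import Name for one Interface In Queue record (illustrative)."""
    alias = record.get("transaction_name_alias")
    if alias:
        hit = distribution_model.get((sender, transaction_group, alias))
        if hit is not None:
            return hit
    # No alias, or the alias matched nothing: fall back to the Transaction Name.
    return distribution_model.get((sender, transaction_group, record["transaction_name"]))


dm = {("SAP_PRD", "OPERA2", "OPERA2"): "IMPORT_OPERA2",
      ("SAP_PRD", "OPERA2", "ZOPERA2"): "IMPORT_ZOPERA2"}
print(find_import_name(dm, "SAP_PRD", "OPERA2", {"transaction_name": "OPERA2"}))
```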

IN_CONVERT: Converts the source data to XML format. The raw XML records can be viewed in the In XML Queue Detail form.

IN_XML_PROCESS: Populates the appropriate tables with the source data that was converted to XML format.

SENDER_NAME: Identifies the sender of the data. Set this value to the name of the SAP system you defined in the Interface Host form. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names for a particular Interface. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface Trans tab of the Interface form.

The IN_SAP service uses its SENDER_NAME and TRANSACTION_GROUP parameters to look up a record in the Distribution Model that will indicate the Import Name to use. The Import Name, defined in the Import Definition form, defines the source data that will be imported from the interface_in_queue tables into the charge_element table.

Each predefined instance of the IN_SAP service has its TRANSACTION_GROUP parameter set to the appropriate setting for the record being imported. In the Interface Trans tab of the Interface form, there is a Transaction Group defined for each of these Transactions. For example, the Transaction Name OPERA2 has the Transaction Group OPERA2.

Process Name: LABOR_ALL

Default Schedule: Run every day at 1 AM, indefinitely

Default Schedule Enabled: No

Module Required: AutoTime Interfaces

The LABOR_ALL service performs the tasks listed below. The service will perform each task on a single person, then process the next person.

Generate Supporting Events

The LABOR_ALL service posts the following events:

Scheduled Events (defined in the Event tab of the Person Schedule form)

Inside Gap Events (defined by the Gap Event Name in the employee's Pay Policy)

Late Arrival and Outside Gap events as defined in the employee's Attendance Policy

Holiday, Time Off, and Day Worked events as defined in the employee's Attendance Policy only if the employee clocks in on days that these events are to post. If the employee does not clock in, the Attendance service posts these events.

Early Departure events as defined in the employee's Attendance Policy only if the event is configured to post Immediately upon clock out.

Pair Formation

The LABOR_ALL service forms transaction pairs based on the values in the Action table. For example, if the Action table has a CLOCK_IN, then a MEAL_START, the Pair Formation service would create the action pair CLOCK_IN MEAL_START as one record.

When you post an event directly on the timecard, the Pair Formation service runs automatically. It is not necessary to run the service in order to update the timecard.
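Pair formation can be pictured as pairing each action with the one that follows it. This Python sketch is illustrative only. It assumes actions are (name, timestamp) tuples and that every consecutive pair, ordered by timestamp, becomes one record. That matches the CLOCK_IN MEAL_START example, but it is not a description of the service's internal logic.

```python
from datetime import datetime


def form_pairs(actions):
    """Turn (action, timestamp) rows into consecutive action pairs."""
    ordered = sorted(actions, key=lambda a: a[1])  # chronological order
    return [(f"{a} {b}", t_a, t_b)
            for (a, t_a), (b, t_b) in zip(ordered, ordered[1:])]


print(form_pairs([("CLOCK_IN", datetime(2024, 3, 1, 8)),
                  ("MEAL_START", datetime(2024, 3, 1, 12))]))
```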

Labor Split

The LABOR_ALL service searches the trans_atomic table for closed atomic records that have crossed an action, a schedule, a shift, or a day boundary and splits the record at the boundary. The rounded timestamp will be used in the creation and splitting of atomics. The trans_atomic table gets updated accordingly. For every atomic record created during the splitting, a new record is created in the trans_atomic_duration table.
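The splitting step can be sketched as cutting an interval at every boundary that falls inside it. In this hypothetical Python model, `day_boundaries` generates the midnights (day boundaries), and any action, schedule, or shift boundaries are passed in as extra datetimes. The timestamps are assumed to be already rounded and timezone-naive.

```python
from datetime import datetime, time, timedelta


def day_boundaries(start, end):
    """Yield each midnight strictly between start and end."""
    day = datetime.combine(start.date() + timedelta(days=1), time.min)
    while day < end:
        yield day
        day += timedelta(days=1)


def split_at_boundaries(start, end, boundaries):
    """Split the closed interval start..end at every boundary inside it."""
    cuts = sorted(b for b in set(boundaries) if start < b < end)
    points = [start, *cuts, end]
    return list(zip(points, points[1:]))
```

Each returned piece corresponds to one split atomic record; in the application, each would also get its own trans_atomic_duration row.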

Time Classification

The LABOR_ALL service posts the appropriate hours classifications on the timecard.

Distribute Labor

The LABOR_ALL service distributes labor atomics and adds time distribution to transactions. The amounts/values (e.g., number of hours, hours classification, and amounts going toward daily and weekly overtime) are displayed in the Transaction Duration tab of the Transaction Details form.

Calculate Rates

The LABOR_ALL service will calculate the PAID_PERSON_PAY and PAID_PERSON_LABOR rates for a transaction. See Transaction Rate for more information on the rates that are stored with a transaction.

The service calculates these rates based on the Pay Rate Ruleset and Labor Rate Ruleset defined in the employee’s Pay Policy.

The Calculate Rates task can also be configured as a separate service instance.

Distribute Labor Details

The LABOR_ALL service calculates transaction details such as shift premiums and hours class premiums. The amounts/values are displayed in the Transaction Duration Detail tab of the Transaction Details form. This information is stored in the trans_action_duration_dtl table.

Process Name: LABOR_ALL_MT

Default Schedule: Run every day at 1 AM, indefinitely

Default Schedule Enabled: No

Module Required: AutoTime Interfaces

The LABOR_ALL_MT (Multi Task) service performs the tasks listed below. The service will perform each task on a single person, then process the next person.

Generate Supporting Events

The LABOR_ALL_MT service posts the following events:

Scheduled Events (defined in the Event tab of the Person Schedule form)

Inside Gap Events (defined by the Gap Event Name in the employee's Pay Policy)

Late Arrival and Outside Gap events as defined in the employee's Attendance Policy

Holiday, Time Off, and Day Worked events as defined in the employee's Attendance Policy only if the employee clocks in on days that these events are to post. If the employee does not clock in, the Attendance service posts these events.

Early Departure events as defined in the employee's Attendance Policy only if the event is configured to post Immediately upon clock out.

Pair Formation

The LABOR_ALL_MT service forms transaction pairs based on the values in the Action table. For example, if the Action table has a CLOCK_IN, then a MEAL_START, the Pair Formation service would create the action pair CLOCK_IN MEAL_START as one record.

When you post an event directly on the timecard, the Pair Formation service runs automatically. It is not necessary to run the service in order to update the timecard.

Labor Split

The LABOR_ALL_MT service searches the trans_atomic table for closed atomic records that have crossed an action, a schedule, a shift, or a day boundary and splits the record at the boundary. The rounded timestamp will be used in the creation and splitting of atomics. The trans_atomic table gets updated accordingly. For every atomic record created during the splitting, a new record is created in the trans_atomic_duration table.

Time Classification

The LABOR_ALL_MT service posts the appropriate hours classifications on the timecard.

Distribute Labor

The LABOR_ALL_MT service distributes labor atomics and adds time distribution to transactions. The amounts/values (e.g., number of hours, hours classification, and amounts going toward daily and weekly overtime) are displayed in the Transaction Duration tab of the Transaction Details form.

Calculate Rates

The LABOR_ALL_MT service will calculate the PAID_PERSON_PAY and PAID_PERSON_LABOR rates for a transaction. See Transaction Rate for more information on the rates that are stored with a transaction.

The service calculates these rates based on the Pay Rate Ruleset and Labor Rate Ruleset defined in the employee’s Pay Policy.

The Calculate Rates task can also be configured as a separate service instance.

Distribute Labor Details

The LABOR_ALL_MT service calculates transaction details such as shift premiums and hours class premiums. The amounts/values are displayed in the Transaction Duration Detail tab of the Transaction Details form. This information is stored in the trans_action_duration_dtl table.

Process Name: MESSAGE_CREATION

Default Schedule: None

Module Required: Messaging

The MESSAGE_CREATION service generates Dialog and System messages. The messages are generated based on rules in the Message Definitions and Message Policies that are assigned to a person.

You must use the service’s MESSAGE_POLICY and MESSAGE_NAME parameters to define which Message Policies and Message Definitions will be used when generating the messages.

You can configure different instances of the MESSAGE_CREATION service to generate specific message types. For example, you can configure an instance specifically for unsigned time card messages.

To create a System Message, the MESSAGE_CREATION service looks at the persons who are assigned to the Message Policy and Message Definition in the service's parameters. The service then processes the rulesets in the Message Definitions. For example, if the MESSAGE_CREATION service is processing the UNSIGNED_TIMECARD_REMINDER Message Definition, it fires the UnsignedTimecardReminderRuleset. This ruleset checks the person's time card in the current pay period and if this time card is unsigned and it is the last scheduled day of the period, a message is sent to the person reminding them to sign their time card.

To create a Dialog Message, the MESSAGE_CREATION service processes records for persons with the Message Policy/Message Definition specified in the service’s parameters. If a Message Definition for a Dialog message is included, the service will generate the dialog message accordingly. The Dialog Message's Message Definition settings will determine if the message displays after login or after a specific event is selected on the terminal.

The Message Definitions you select in the MESSAGE_NAME parameter must be included in one of the Message Policies selected in the MESSAGE_POLICY parameter in order for the service to create messages based on those definitions.

For example, you may have one Message Policy that contains both the Unsigned Time Card Reminder and Unsigned Time Card Warning Message Definitions. You want to configure one instance of the MESSAGE_CREATION service to process the Unsigned Time Card Reminder messages, and another instance to process the Unsigned Time Card Warning messages. Both instances will have the same MESSAGE_POLICY parameter, but one instance will have the Unsigned Time Card Reminder MESSAGE_NAME and one instance will have the Unsigned Time Card Warning MESSAGE_NAME.

MESSAGE_POLICY: Defines the Message Policies for which the service will generate messages. Move the policies from the Available column to the Selected column to enable them. The MESSAGE_CREATION service will only generate messages for persons assigned to these Message Policies. If no Message Policies are in the Selected column, this instance will not generate any Dialog or System messages.

MESSAGE_NAME: Defines the Message Definitions for which the service will generate messages. The available options are Message Definitions with the DIALOG or SYSTEM Message Type. Move the Message Definitions from the Available column to the Selected column to enable them. The MESSAGE_CREATION service will only generate messages based on the selected Message Definitions.
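The interaction between MESSAGE_POLICY and MESSAGE_NAME can be modeled as a set intersection. In this hypothetical Python sketch, `policies` maps each Message Policy to the Message Definitions it contains. The policy name TIMECARD_POLICY and the definition name UNSIGNED_TIMECARD_WARNING are invented for illustration.

```python
def definitions_to_run(policies, selected_policies, selected_names):
    """Message Definitions a MESSAGE_CREATION instance will actually use.

    policies: mapping of Message Policy name -> set of Message Definition names.
    """
    in_selected_policies = set()
    for name in selected_policies:
        in_selected_policies |= policies.get(name, set())
    # A selected definition is used only if some selected policy contains it,
    # so an empty MESSAGE_POLICY selection produces no messages.
    return set(selected_names) & in_selected_policies


policies = {"TIMECARD_POLICY": {"UNSIGNED_TIMECARD_REMINDER", "UNSIGNED_TIMECARD_WARNING"}}
print(definitions_to_run(policies, ["TIMECARD_POLICY"], ["UNSIGNED_TIMECARD_REMINDER"]))
```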

Process Name: MESSAGE_DELIVERY

Default Schedule: Run every hour, indefinitely

Default Schedule Enabled: No

Module Required: Messaging

The MESSAGE_DELIVERY service delivers email messages.

You can send Broadcast, Trigger, System, and Exception messages via email.

Broadcast Messages: The Send Email box must be checked. You can define the subject, header, message text, and trailer of the email message when you create the broadcast message.

Trigger Messages: The SEND_EMAIL_TO_USER and/or SEND_EMAIL_TO_MANAGER Trigger Settings must be True.

System and Exception Messages: The Create Message operands in the Message Definition’s ruleset must include Email in the Medium parameter.

The MESSAGE_DELIVERY service also sends email messages regarding terminal status changes. These messages are generated by the TERMINAL_MONITOR service. Note that the terminals you want to monitor must have the Monitor box checked in the Terminal form.

You must configure your system’s settings in order to send email. To receive an email message, a person must have an email address defined on the Employee form.

RETRY_DELIVERY

When an email message delivery fails (for example, if the recipient does not have an email address defined in their Person record), an error log record is created. The RETRY_DELIVERY parameter indicates whether the MESSAGE_DELIVERY service will continue trying to send the email each time the service runs.

The default setting is True, meaning the MESSAGE_DELIVERY service will continue trying to send the email each time the service runs. The message will still be marked as Ready even after the error log record is created. If the message delivery continues to fail, a new error log record will be created for each failed attempt.

If you change this setting to False, the message will be marked as Error after the first failed message delivery. The MESSAGE_DELIVERY service will no longer try to send the email when the service runs.
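The RETRY_DELIVERY behavior can be sketched as a status update applied after each failed attempt. This Python model is illustrative. The message dictionary and the function names are hypothetical, and a real run would write to the Error Log rather than to a list.

```python
def record_failure(message, retry_delivery, error_log):
    """Apply the RETRY_DELIVERY rule after one failed email delivery."""
    error_log.append(f"email delivery failed for message {message['id']}")
    # True keeps the message Ready so the next run tries again;
    # False marks it Error so later runs skip it.
    message["status"] = "Ready" if retry_delivery else "Error"


def messages_to_send(messages):
    """Messages the next MESSAGE_DELIVERY run would attempt."""
    return [m for m in messages if m["status"] == "Ready"]
```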

Process Name: OFFLINE_DATA_PROCESSOR

Default Schedule: None

Module Required: None

Sometimes terminals lose connectivity with the application server and go offline. When a terminal is offline, employees can continue to punch transactions and the terminal will store the data in offline files.

Once the connectivity to the application server is restored and the terminal is back online, the offline files are moved to the terminal_offline_queue table. You can view these files in the Offline Data Queue form.

The OFFLINE_DATA_PROCESSOR service moves the data from the terminal_offline_queue table to the terminal_offline_queue_dtl table. You can view these records in the Offline Data Records form. The records will be sorted and processed in the order of their transaction timestamps. If a transaction is successfully processed, its status will be C (Complete).
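The ordering rule can be illustrated with a short Python sketch. The record fields and the `handle` callback are hypothetical stand-ins for the offline transaction and the posting logic. Only the timestamp ordering and the C (Complete) status come from the text above.

```python
def process_offline(records, handle):
    """Process offline transactions oldest-first and record each outcome.

    `handle` stands in for posting one transaction; it raises ValueError
    when the transaction is invalid (e.g. an unknown work order number).
    """
    for rec in sorted(records, key=lambda r: r["timestamp"]):
        try:
            handle(rec)
            rec["status"] = "C"  # Complete
        except ValueError as exc:
            rec["status"] = "Error"  # assumption: the stored status code may differ
            rec["error"] = str(exc)  # the real service writes to the Error Log
    return records
```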

Some terminals with offline data will not go back online until the OFFLINE_DATA_PROCESSOR service runs (in order to ensure the time sequence integrity of the transactions). The status of these terminals will remain as Offline - Processing Offline Data until the service runs.

Note: If a terminal is a member of a Terminal Group, the service will process the offline records of all the terminals in a Terminal Group when the Terminal Group's status is Offline Processing or Online, regardless of the status of the individual group members. If the Terminal Group's status is Offline Queuing, the service will not process the offline records of any terminals in the group, regardless of the status of the individual group members. See Configuring Shop Floor Time to Use Multiple Data Collection Systems for more information.
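The Terminal Group rule in the note above can be expressed as a small decision function. This Python sketch is an assumption-laden illustration: `members_to_process` is a hypothetical name, and it only covers the three group statuses named in this documentation.

```python
from typing import Dict, List

# Group statuses under which the service processes every member's offline records.
PROCESSING_STATUSES = {"Offline Processing", "Online"}


def members_to_process(group_status: str, member_statuses: Dict[str, str]) -> List[str]:
    """Return the group members whose offline records the service will process.

    Only the Terminal Group's status matters; member statuses are ignored.
    """
    if group_status in PROCESSING_STATUSES:
        return sorted(member_statuses)
    if group_status == "Offline Queuing":
        return []
    raise ValueError(f"Terminal Group status not covered by this sketch: {group_status}")
```

For example, a group whose status is Online has all of its members processed even if one member still reports Offline Queuing.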

The Offline Data Queue form contains the offline data files that have been downloaded from the terminals to the application server. Each offline data file may contain multiple transactions; you can view these transactions in the Offline Data tab at the bottom of the Offline Data Queue form. The Offline Data Queue form displays the data in the terminal_offline_queue table.

The Offline Data Records form contains individual transactions from each of the processed files in the Offline Data Queue form. These transactions have been processed by the OFFLINE_DATA_PROCESSOR service. This data is stored in the terminal_offline_queue_dtl table.

The Offline Records tab in the Offline Data Records form displays the actual content of each transaction. The Offline Record Error tab in the Offline Data Records form displays details about offline data records with the Record Status of Error.

If the OFFLINE_DATA_PROCESSOR service encounters an error when processing the offline data (e.g., an employee enters an invalid work order number), the service will record the error in the Error Log. You can view this error in the Offline Record Error tab of the Offline Data Records form.

A message can also be sent to the person whose offline transaction caused the error, this person's supervisor, and a system administrator. The message will contain details about the error, where it occurred, and when it occurred.

To create these messages, you will need to make sure the EXCEPTION_MESSAGE_CREATION service is configured to process the ERROR_LOGGED_BY_OFFLN_DATA_PROC_EXCPTN Message Definition. This Message Definition uses a Messaging Ruleset to create messages for the person and the supervisor. You will need to create a rule and add it to the ruleset for this Message Definition.

The OFFLINE_DATA_PROCESSOR service will process records in the terminal_offline_queue table in the order of their timestamps. You can use the GID, DID, and TERMINAL_PROFILE parameters to configure the service to process these records only for specific terminals or Terminal Profiles.

GID, DID

GID is the terminal's Group ID number and DID is the terminal's Device ID number. These values are defined in the Terminal form.

If you want this instance of the OFFLINE_DATA_PROCESSOR service to only process the offline data of a specific terminal, enter the terminal's GID and DID.

If you specify only a GID or a DID, then this instance will process the offline data of all terminals with the specified GID or DID.

TERMINAL_PROFILE

If you want this instance of the OFFLINE_DATA_PROCESSOR service to only process the offline data of terminals with a specific Terminal Profile, move the Terminal Profile from the Available column to the Selected column.

If no Terminal Profiles are in the Selected column, and there is no GID/DID specified, this instance of the OFFLINE_DATA_PROCESSOR service will process all the offline data records in the terminal_offline_queue table.
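The GID, DID, and TERMINAL_PROFILE rules above can be summarized as a filter. This is a minimal Python sketch: `Terminal` and `instance_selects` are hypothetical names, and the documentation does not say how the parameters interact when several are set, so the sketch assumes a terminal must match all of them.

```python
from dataclasses import dataclass
from typing import AbstractSet, Optional


@dataclass(frozen=True)
class Terminal:
    gid: int      # Group ID from the Terminal form
    did: int      # Device ID from the Terminal form
    profile: str  # Terminal Profile


def instance_selects(
    terminal: Terminal,
    gid: Optional[int] = None,
    did: Optional[int] = None,
    profiles: AbstractSet[str] = frozenset(),
) -> bool:
    """True if this service instance would process the terminal's offline data.

    Assumption: when several parameters are set, a terminal must match all of them.
    """
    if gid is not None and terminal.gid != gid:
        return False
    if did is not None and terminal.did != did:
        return False
    if profiles and terminal.profile not in profiles:
        return False
    return True  # matches every parameter that is set (or none are set)
```

With no parameters set, every terminal is selected; with only a GID, every terminal in that group is selected; with both GID and DID, exactly one terminal is selected.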

Process Name: OUT_EXPORT_TABLE

Instance Name: OUT_BAAN_HRA

Default Schedule: None

Module Required: None

The OUT_BAAN_HRA service exports work order transactions to the HRA staging table in Baan. This service selects transactions with a specific Sender Name, which is determined by a person’s Process Policy. Baan function servers then update the core Baan tables with the HRA records and write a success or error message to a Baan table. Shop Floor Time imports these messages to update the transaction and the error log.
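The export and feedback round trip described above (which OUT_BAAN_HRS, below, also follows) can be sketched in Python. Everything here is hypothetical: the `Transaction` class, the status names, the staging row layout, and the `(trans_id, succeeded, message)` result format stand in for the real Baan tables, which are not documented in this section.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Transaction:
    trans_id: int
    sender_name: str
    status: str = "Posted"  # hypothetical status names throughout
    error: str = ""


def export_to_staging(transactions: List[Transaction], sender_name: str) -> List[Dict[str, int]]:
    """Select this instance's transactions and build rows for the staging table."""
    rows = []
    for t in transactions:
        if t.sender_name == sender_name and t.status == "Posted":
            rows.append({"trans_id": t.trans_id})
            t.status = "Exported"
    return rows


def import_results(
    transactions: List[Transaction],
    results: List[Tuple[int, bool, str]],
    error_log: List[Tuple[int, str]],
) -> None:
    """Apply the (trans_id, succeeded, message) results written back by Baan."""
    by_id = {t.trans_id: t for t in transactions}
    for trans_id, succeeded, message in results:
        t = by_id[trans_id]
        if succeeded:
            t.status = "Complete"
        else:
            t.status = "Error"
            t.error = message
            error_log.append((trans_id, message))
```

The Sender Name filter is what lets several instances share the transaction tables without exporting each other's data.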

SENDER_NAME: Identifies the sender of the data. Select the name of the Baan instance you defined in the Interface Host form. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names for a particular Interface. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface Trans tab of the Interface form.

EXPORT_NAME: Export Definition that will be used by the service.

Process Name: OUT_EXPORT_TABLE

Instance Name: OUT_BAAN_HRS

Default Schedule: None

Module Required: None

The OUT_BAAN_HRS service exports project transactions to the HRS staging table in Baan. This service selects transactions with a specific Sender Name, which is determined by a person’s Process Policy. Baan function servers then update the core Baan tables with the HRS records and write a success or error message to a Baan table. Shop Floor Time imports these messages to update the transaction and the error log.

SENDER_NAME: Identifies the sender of the data. Select the name of the Baan instance you defined in the Interface Host form. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names for a particular Interface. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface Trans tab of the Interface form.

EXPORT_NAME: Export Definition that will be used by the service.

Process Name: OUT_EXPORT_TABLE

Instance Name: OUT_BAAN_SFC

Default Schedule: None

Module Required: None

The OUT_BAAN_SFC service will export project and financial transactions to the TTMSFC100 table in Baan.

SENDER_NAME: Identifies the sender of the data. Select the name of the Baan instance you defined in the Interface Host form. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names for a particular Interface. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface Trans tab of the Interface form.

EXPORT_NAME: Export Definition that will be used by the service.

Process Name: OUT_COSTPOINTSF

Instance Name: OUT_COSTPOINTSF

Default Schedule: Run every 5 minutes, indefinitely

Default Schedule Enabled: Yes

Module Required: Costpoint Shop Floor Core

The OUT_COSTPOINTSF service sends transaction data to Costpoint.

A separate instance of the OUT_COSTPOINTSF service must be configured for each Costpoint system that will receive data. The service’s SENDER_NAME parameter is used to identify the Costpoint system.

OUT_READ_EXPORT: Reads data from the transaction tables.

OUT_CONVERT: Takes data from the Out XML Queue and puts it in the Interface Out Queue.

OUT_SEND_WEB_SERVICE: Sends the data to Costpoint.

SENDER_NAME: Identifies the sender of the data: the Interface Name that is configured with the destination Costpoint server. Posted transactions will be sent to this Costpoint server. Select the name of the Costpoint system you defined in the Interface Host form. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface and Interface Trans forms.

NUMBER_PARALLEL_SEND: Number of interface records to send simultaneously.
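The three OUT_COSTPOINTSF processes form a pipeline, and NUMBER_PARALLEL_SEND bounds how many records are in flight during the send step. The Python sketch below is illustrative only: `run_costpoint_instance`, `to_interface_record`, the dictionary record layout, and the XML payload format are hypothetical, and `send` stands in for the web service call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def to_interface_record(txn: Dict) -> Dict:
    """OUT_CONVERT stand-in: wrap a transaction as an XML payload (hypothetical format)."""
    return {"id": txn["id"], "xml": f'<transaction id="{txn["id"]}"/>'}


def run_costpoint_instance(
    transactions: List[Dict],
    sender_name: str,
    number_parallel_send: int,
    send: Callable[[Dict], str],
) -> List[str]:
    # OUT_READ_EXPORT: read the transactions that belong to this instance's SENDER_NAME.
    out_xml_queue = [t for t in transactions if t["sender_name"] == sender_name]
    # OUT_CONVERT: move the data from the Out XML Queue to the Interface Out Queue.
    interface_out_queue = [to_interface_record(t) for t in out_xml_queue]
    # OUT_SEND_WEB_SERVICE: send up to NUMBER_PARALLEL_SEND records at the same time.
    with ThreadPoolExecutor(max_workers=number_parallel_send) as pool:
        return list(pool.map(send, interface_out_queue))
```

A thread pool fits here because each send mostly waits on the network; raising NUMBER_PARALLEL_SEND increases throughput at the cost of more simultaneous load on the Costpoint server.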

Process Name: OUT_EBS_AP_EXPORT

Default Schedule: None

Module Required: AutoTime Interfaces

The OUT_EBS_AP_EXPORT service can be used to run an AP Export for a specific Export Definition. The service will export any records that have been Payroll Locked and have not been previously exported. You can also use the OFFSET_DAYS parameter to define the number of days prior to the day the service runs for which the service will export records.

You can schedule the OUT_EBS_AP_EXPORT service to run automatically or you can run the service instance manually via the Service Monitor form.

You can also run an AP Export manually from the Exports form.

OUT_READ_EXPORT: Reads data from the transaction tables.

OFFSET_DAYS: Number of days prior to the day the service runs for which the service will export records. For example, if OFFSET_DAYS is set to 7 and the service runs on 5/17/2013, the service will export records from 5/11/2013 to 5/17/2013. The default value is 0, meaning the service will only export records with the current date (the date the service runs).

SENDER_NAME: Indicates which external system will receive the exported data for this instance of the OUT_EBS_AP_EXPORT service. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface and Interface Trans forms.

EXPORT_NAME: Identifies the Export Definition for which this instance should be run. Available options are Export Definitions defined in the Export form.

MODE: Select TODAY to export transactions with the current date or prior. Select ALL to export transactions with the current date, a prior date, or a future date.

The POST_DATE parameter in your Export Definition is used to restrict the transactions that will be exported based on their Post Date. For example, you may want to prevent transactions with a Post Date in the future from being exported.

Make sure the MODE parameter for the OUT_EBS_AP_EXPORT service is consistent with the Export Definition’s POST_DATE parameter. If these settings are different, the more restrictive setting will be used.

If the service’s MODE is TODAY, the Export Definition’s POST_DATE parameter should be set to Current. If the service’s MODE is ALL, then the Export Definition’s POST_DATE parameter should also be set to All.
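The selection rules above (Payroll Locked, not previously exported, and the more restrictive of MODE and POST_DATE) can be sketched in Python. The class and function names are hypothetical, and the OFFSET_DAYS window is deliberately left out of this sketch.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class ApTransaction:
    post_date: date
    payroll_locked: bool
    exported: bool = False


def effective_mode(service_mode: str, export_post_date: str) -> str:
    """Combine MODE (TODAY/ALL) with POST_DATE (Current/All); the more restrictive wins."""
    if service_mode == "TODAY" or export_post_date == "Current":
        return "TODAY"
    return "ALL"


def select_for_ap_export(
    txns: List[ApTransaction], run_date: date, service_mode: str, export_post_date: str
) -> List[ApTransaction]:
    """Payroll Locked, not yet exported, and allowed by the effective post-date mode."""
    mode = effective_mode(service_mode, export_post_date)
    return [
        t
        for t in txns
        if t.payroll_locked
        and not t.exported
        and (mode == "ALL" or t.post_date <= run_date)
    ]
```

For example, with MODE set to ALL but POST_DATE set to Current, a transaction with a future Post Date is still held back, because Current is the more restrictive setting.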

Process Name: OUT_EBS_AP_INVOICE

Default Schedule: None

Module Required: AutoTime Interfaces

The OUT_EBS_AP_INVOICE service should be run after the OUT_EBS_AP_EXPORT service. The OUT_EBS_AP_INVOICE service executes a stored procedure in your Oracle EBS database. This stored procedure takes the data that was exported from Shop Floor Time to Oracle EBS (by the OUT_EBS_AP_EXPORT service) and moves this data to Oracle EBS tables that store invoice data.

You can schedule the OUT_EBS_AP_INVOICE service to run automatically or you can run the service instance manually via the Service Monitor form.

OUT_EXECUTE_STORED_PROC: Executes a stored procedure (specified in the STORED_PROCEDURE_NAME parameter) in your Oracle EBS database that takes the data that was exported from Shop Floor Time to Oracle EBS (by the OUT_EBS_AP_EXPORT service) and moves this data to Oracle EBS tables that store invoice data.

SENDER_NAME: Indicates which external system will receive the exported data for this instance of the OUT_EBS_AP_INVOICE service. Available options are Receivers defined in the Interface Host form.

STORED_PROCEDURE_NAME: Name of the stored procedure that is executed by this service. The default stored procedure, ORACLE_EBS_AP_INVOICE.sql, is located in the \db directory where Shop Floor Time is installed (\db\sql\scripts\schema\ORACLE\ERP\ORACLE_EBS).
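Conceptually, the OUT_EXECUTE_STORED_PROC step calls one procedure by name. The Python sketch below shows that call through a generic PEP 249 (DB-API 2.0) connection; it is not how the service itself is implemented. It assumes the procedure takes no arguments and that the caller commits, and the procedure name used in practice would be whatever STORED_PROCEDURE_NAME holds.

```python
def run_ap_invoice_procedure(connection, procedure_name: str) -> None:
    """Call the invoice stored procedure through a PEP 249 (DB-API 2.0) connection.

    Assumes the procedure takes no arguments and that the caller commits the work.
    """
    cursor = connection.cursor()
    try:
        cursor.callproc(procedure_name)  # optional DB-API method; python-oracledb provides it
        connection.commit()
    finally:
        cursor.close()
```

With the python-oracledb driver, `connection` would come from `oracledb.connect(user=..., password=..., dsn=...)`. Running the procedure only after OUT_EBS_AP_EXPORT has finished matters because it reads the data that service exported.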

Process Name: OUT_EBS_GL_EXPORT

Default Schedule: None

Module Required: AutoTime Interfaces

The OUT_EBS_GL_EXPORT service can be used to run a GL Export.

The OUT_EBS_GL_EXPORT service uses its SENDER_NAME and TRANSACTION_GROUP parameters to look up a record in the Distribution Model that will indicate the Export Name to use.

You can schedule the OUT_EBS_GL_EXPORT service to run automatically or you can run the service instance manually via the Service Monitor form. You can also run a GL Export manually from the Exports form.

The OUT_EBS_GL_EXPORT service will only export transactions with the Sender Name specified in the service’s parameter. To ensure your transactions have the correct Sender Name, make sure you configure your Process Policy correctly. This service instance should be included as a Process Name in your Process Policy (via the Process or Event tab) and the Sender Name you specify for this service instance should be assigned to that Process Name.
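The Distribution Model lookup and the Sender Name filter described above can be sketched in Python. The Distribution Model is represented here as a plain dictionary, and its entries (`EBS_PROD`, `GL_TRANSACTIONS`, `GL_EXPORT_PROD`) and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical Distribution Model rows: (SENDER_NAME, TRANSACTION_GROUP) -> Export Name.
DISTRIBUTION_MODEL: Dict[Tuple[str, str], str] = {
    ("EBS_PROD", "GL_TRANSACTIONS"): "GL_EXPORT_PROD",
}


def resolve_export_name(
    sender_name: str, transaction_group: str, model: Dict[Tuple[str, str], str]
) -> str:
    """Return the Export Name recorded for this sender and transaction group."""
    try:
        return model[(sender_name, transaction_group)]
    except KeyError:
        raise LookupError(
            f"No Distribution Model record for {sender_name}/{transaction_group}"
        ) from None


def select_by_sender(transactions: List[Dict], sender_name: str) -> List[Dict]:
    """Only transactions whose Sender Name matches the service parameter are exported."""
    return [t for t in transactions if t["sender_name"] == sender_name]
```

A transaction whose Process Policy assigned a different Sender Name is simply never selected, which is why the Process Policy configuration matters.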

OUT_READ_EXPORT: Reads data from the transaction tables.

SENDER_NAME: Indicates which external system will receive the exported data for this instance of the OUT_EBS_GL_EXPORT service. Available options are Receivers defined in the Interface Host form.

TRANSACTION_GROUP: A Transaction Group is used to group Transaction Names. The table columns that are mapped to the Transaction Names that use the Transaction Group will be populated when the service runs. You can view the available Transaction Names and Transaction Groups for each interface in the Interface and Interface Trans forms.

MODE: Select TODAY to export transactions with the current date or prior. Select ALL to export transactions with the current date, a prior date, or a future date.